The AI Engineering course I wish I had

Learn MCPs, agent monitoring, and how real AI systems actually work

Not sure what’s going on lately, but every time I open Substack or LinkedIn I keep seeing the same stuff:

“A complete list of resources to become an AI Engineer”

“The ultimate roadmap to master AI Agents”

“If I were starting in AI Engineering in 2025, this is the roadmap I’d follow”

And honestly, I could scroll through variations of this all morning 😅

Don’t get me wrong—I think it’s great that people are putting together roadmaps for aspiring ML/AI engineers. I’m working on a few myself!

But here’s the thing:

They’re a nice starting point, not a real path forward.

They rarely give you what you actually need to build a career in this field. That’s why all my content is hands-on.

I’m not just here to list out steps—I want to walk with you through the process. Help you build the technical skills that make companies actually pay attention.

That’s exactly what the course I built with

is about. It’s designed to show you what a real-world agent project actually looks like.

Not a bunch of disconnected notebooks—but a full system made up of multiple subsystems, written in different languages, all working together like they would in the real world.

Because if you're serious about working in AI, this is the kind of experience that counts.

Ladies and gentlemen, meet Kubrick 👇

💡 Kubrick is a collaboration between The Neural Maze and

. Check out the code repository to follow the full course syllabus and get access to all the resources and articles we’ve been putting together!

A project for real AI Engineers

When Alex and I kicked off this project, there were a few things we knew for sure.

We wanted it to be centered around video—not just another RAG app crawling through documents. Nope.

We wanted to take RAG and apply it to video. So yeah, video RAG.

We also wanted to show a real-world use case for an MCP server, plus bring in agent monitoring, prompt versioning, and observability.

This isn’t a 3-minute tutorial. It’s a deep, hands-on course built to take you from aspiring AI Engineer to high-performing one.

⚠️ So before you dive in … fair warning!

You’ll need to put in the work to get the most out of it. But trust me, it’s worth every bit of effort 😉

Here’s a breakdown of the components you’ll build (with the related articles) 👇

Video RAG - The magic of Pixeltable

Ever wish you could ask a system super-specific questions about a video — like the color of someone’s shirt or the number of glasses on a counter — and actually get an answer?

That’s the concept behind Video-RAG (Retrieval-Augmented Generation for video), inspired by this “Video-RAG” research paper.

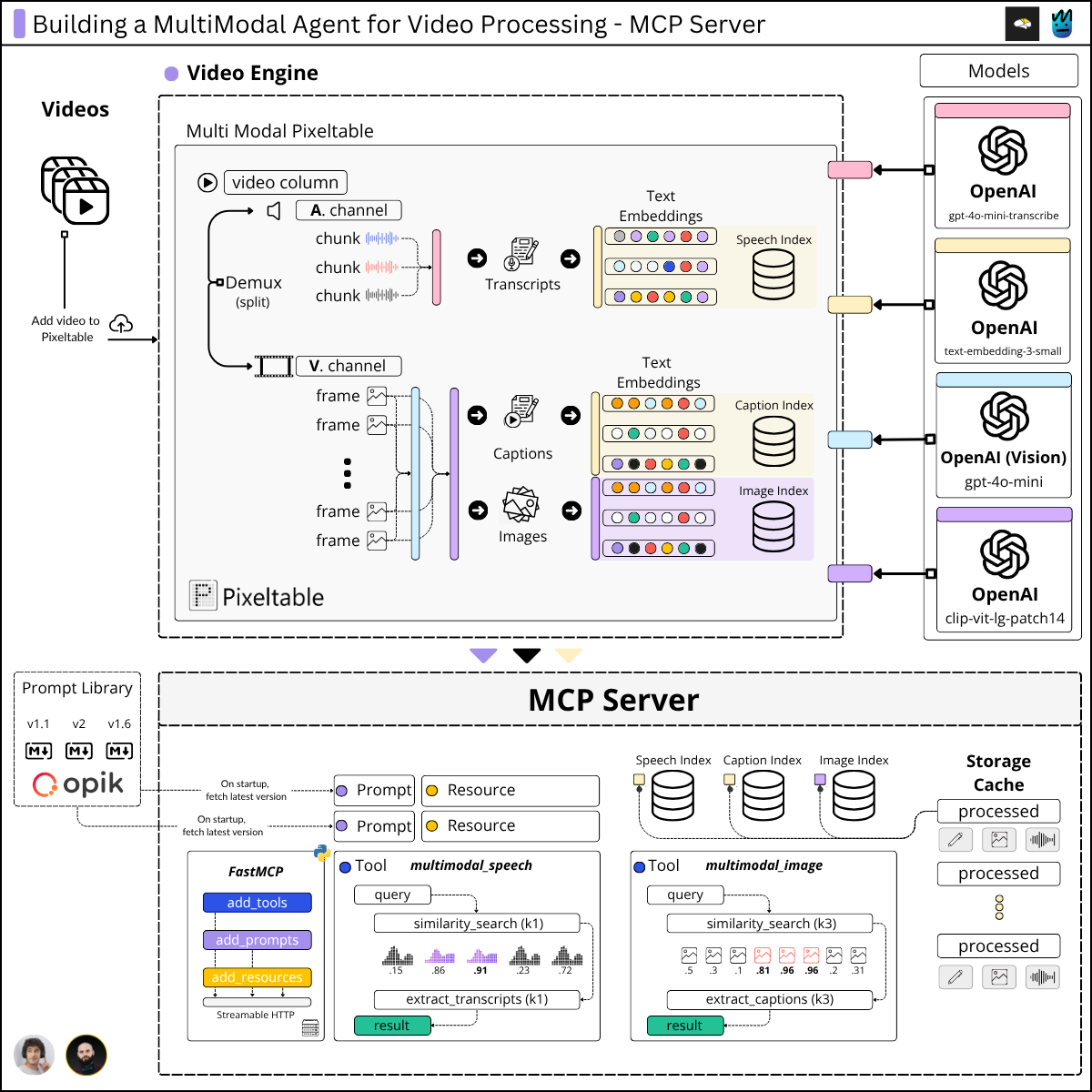

Instead of analyzing an entire video all at once, Kubrick breaks it down: audio gets transcribed and embedded, frames are sampled and embedded using CLIP, and captions are pulled in too.

This turns video understanding into a smart search problem.
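To make the “search problem” idea concrete, here’s a minimal sketch in plain Python. It is not Pixeltable’s API and not the actual Kubrick code: the three toy indexes, the hand-made 3-dimensional vectors (standing in for real audio, CLIP, and caption embeddings), and the `search` helper are all assumptions for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical toy indexes: each row is (timestamp in seconds, embedding).
# Real embeddings would come from a speech/text model and CLIP.
INDEXES = {
    "transcripts": [(3.0, [0.9, 0.1, 0.0]), (42.0, [0.1, 0.8, 0.1])],
    "frames": [(5.0, [0.2, 0.2, 0.9]), (40.0, [0.0, 0.9, 0.3])],
    "captions": [(41.0, [0.1, 0.9, 0.2])],
}

def search(query_vec, top_k=2):
    """Score every row in every index and return the best (score, index, timestamp) hits."""
    hits = []
    for name, rows in INDEXES.items():
        for timestamp, vec in rows:
            hits.append((cosine(query_vec, vec), name, timestamp))
    hits.sort(reverse=True)
    return hits[:top_k]

# A made-up query embedding; the best hits cluster around the 40-42 second mark.
results = search([0.0, 1.0, 0.2])
for score, index_name, timestamp in results:
    print(f"{index_name:12s} t={timestamp:5.1f}s score={score:.3f}")
```

The point is only the shape of the problem: once every modality lives in an index with timestamps attached, “what happens in the video?” becomes “which rows are closest to my query?”.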

And the secret weapon behind it all? Pixeltable

Pixeltable is a Python library built for projects exactly like this. It simplifies complex multimodal pipelines, handles things like frame sampling and audio transcription, and creates semantic vector indexes — all with just a few lines of code.

💡 Bonus: it’s fast, efficient, and packed with features like caching and parallelization.

Read the full article here 👇

An MCP Server for Video Processing Tools

An MCP Server for Video Processing? 😯

Yep, you read that right.

After running the Video RAG pipeline, we ended up with three embedding indexes—transcripts, captions, and frame data. All that juicy information just waiting to be put to work.

So… how do we use it?

By building MCP Tools that let the Kubrick Agent do its thing—autonomously finding frames, pulling clips, or just grabbing whatever relevant insights it needs from the video.

In this lesson, you’ll get hands-on with FastMCP.

Creating resources, exposing prompts, and defining tools—all in one place.
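If you’re wondering what “defining a tool” boils down to, here’s a rough stand-in using only the standard library. FastMCP does this work for you with its own decorators; the `tool` decorator, the `TOOLS` registry, and the `get_clip_by_question` tool below are hypothetical names that just mimic the idea: register a function and describe it with a name, a description, and a JSON Schema for its inputs.

```python
import inspect

# Hypothetical in-memory registry: tool name -> (function, descriptor).
TOOLS = {}

_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def tool(fn):
    """Register fn and build a descriptor shaped like an MCP tool listing entry."""
    params = inspect.signature(fn).parameters
    properties = {
        name: {"type": _JSON_TYPES.get(p.annotation, "string")}
        for name, p in params.items()
    }
    # Parameters without a default value are required.
    required = [n for n, p in params.items() if p.default is inspect.Parameter.empty]
    descriptor = {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }
    TOOLS[fn.__name__] = (fn, descriptor)
    return fn

@tool
def get_clip_by_question(video_path: str, question: str, top_k: int = 1):
    """Return the clip that best answers a question about the video."""
    # Placeholder body: a real tool would query the embedding indexes.
    return {"video": video_path, "question": question, "top_k": top_k}

def call_tool(name, arguments):
    """Look up a registered tool by name and call it with keyword arguments."""
    fn, _ = TOOLS[name]
    return fn(**arguments)

print(TOOLS["get_clip_by_question"][1])
print(call_tool("get_clip_by_question", {"video_path": "demo.mp4", "question": "Who wears red?"}))
```

An agent never sees your Python function, only the descriptor. That’s why clear docstrings and typed parameters matter so much when you write tools.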

Check out the full article here 👇

MCP Agent, Agent API and Observability Layer

Now that the MCP Server is up and running, it’s time to put it to good use with the Kubrick Agent.

This final section is split into two lessons.

The first one walks you through creating an MCP Agent from scratch—no agentic frameworks involved.

You’ll learn how to build out the agent logic step by step, hook up an MCP Client, and translate the MCP tools into your LLM provider’s format (we’re using Groq here).
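Here’s a hedged sketch of those two pieces: translating an MCP tool descriptor into the OpenAI-compatible function-calling format that Groq accepts, and a bare-bones agent loop around it. The `find_frame` tool, the `fake_llm` stub (standing in for a real model call), and the `run_agent` helper are assumptions for illustration, not the course’s actual code.

```python
import json

def mcp_tool_to_chat_tool(tool):
    """Map an MCP tool descriptor to an OpenAI-compatible tool definition."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }

def run_agent(user_message, llm, tools, call_tool, max_steps=5):
    """Ask the model, run any tool it requests, feed the result back, repeat."""
    messages = [{"role": "user", "content": user_message}]
    chat_tools = [mcp_tool_to_chat_tool(t) for t in tools]
    for _ in range(max_steps):
        reply = llm(messages, chat_tools)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]  # no tool requested: this is the final answer
        for call in tool_calls:
            args = json.loads(call["function"]["arguments"])
            result = call_tool(call["function"]["name"], args)
            messages.append(
                {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(result)}
            )
    return "Stopped: step limit reached."

# Hypothetical MCP tool descriptor, as an MCP client might list it.
MCP_TOOLS = [{
    "name": "find_frame",
    "description": "Find the timestamp of the frame that best matches a query.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}]

def fake_llm(messages, chat_tools):
    """Stub model: request the tool once, then answer using the tool result."""
    if messages[-1]["role"] == "tool":
        return {"role": "assistant", "content": "Found it: " + messages[-1]["content"]}
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",
            "type": "function",
            "function": {"name": "find_frame", "arguments": json.dumps({"query": "red shirt"})},
        }],
    }

def fake_call_tool(name, arguments):
    """Stub for the MCP client call; returns a made-up timestamp."""
    return {"timestamp": 12.5}

answer = run_agent("When does the red shirt appear?", fake_llm, MCP_TOOLS, fake_call_tool)
print(answer)
```

Swap `fake_llm` for a real chat completion call and `fake_call_tool` for your MCP client, and the loop itself barely changes. That’s the whole appeal of skipping the framework.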

But of course, we need a way to expose our Agent to the world—or more specifically, to the UI we’ll be using to interact with Kubrick.

That’s what the last lesson is all about: building the Agent API and layering in observability with Opik so you can actually see what’s going on under the hood.
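To give a feel for what “seeing under the hood” means, here’s a tiny tracing decorator built with the standard library. It is a stand-in for the idea only, not Opik’s API: the `traced` decorator, the `TRACES` list, and `answer_question` are hypothetical names.

```python
import functools
import time

# Hypothetical in-memory trace store; an observability tool would ship these elsewhere.
TRACES = []

def traced(fn):
    """Record the inputs, output (or error), and duration of every call to fn."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        span = {"name": fn.__name__, "input": {"args": args, "kwargs": kwargs}}
        try:
            span["output"] = fn(*args, **kwargs)
            return span["output"]
        except Exception as exc:
            span["error"] = repr(exc)
            raise
        finally:
            # Runs on success and on failure, so every call leaves a span behind.
            span["duration_s"] = time.perf_counter() - start
            TRACES.append(span)
    return wrapper

@traced
def answer_question(question: str) -> str:
    # Placeholder for the real agent call.
    return f"(stub answer to: {question})"

answer_question("What color is the shirt?")
print(TRACES[0]["name"], TRACES[0]["output"])
```

Wrap the agent entry point and each tool call like this and you can already answer the basic questions: what was called, with what, how long it took, and where it failed.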

That was a fun project, don’t you think?

I mean: Video + MCP + Agents. What could possibly go wrong? 😍

Before I wrap up, just two quick things:

First, don’t forget to check out the

if you want more resources on the project. All the articles are listed in the GitHub repo, so you’ve got everything in one place!

Second, let’s talk about The Neural Maze and what’s coming next.

Because after summer… things are going to change.

But don’t worry—it’s all for the better.

I’ve been thinking hard about how to bring more value to you all, and I think I’ve finally cracked it.

I’m starting a community.

Built for real builders, by real builders.

Think: guided roadmaps, weekly Q&As, more hands-on courses, more videos.

A space away from the noise. A space to grow into the kind of ML/AI engineer companies fight to hire.

Stay tuned, and have an awesome Wednesday! 👋
