After 35 written posts on Substack, I’m trying something new:

🎥 A video post!

To be honest, I’ve been having a lot of fun recording for my YouTube channel, so I figured:

I’ve got the mic, the camera, and the skills to shoot and edit, so … why not bring some of that video energy here too? 🤔

Which brings us to today’s post (or should I say, video?)

But before you hit play, here’s a quick rundown of what I’ll be covering, plus a few links to previous lessons and extra resources that’ll help you get the most out of it.

Let’s go! 👇

Before you play the video …

⚠️ This post builds on the theory and code covered in previous ones, so be sure to check them out if you haven’t already!

In the video, I’ll be sharing my screen and walking you through the work I’ve been doing with

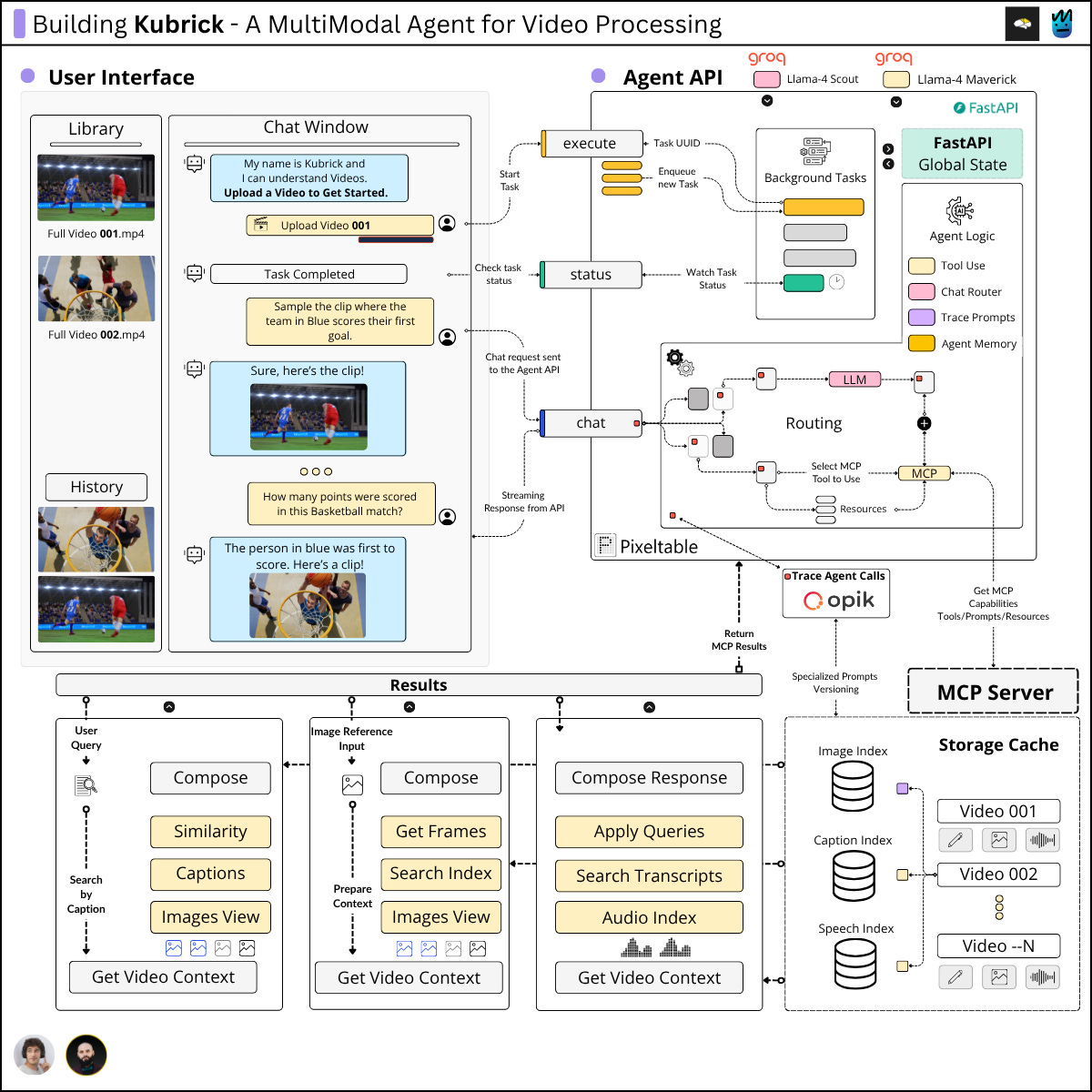

on how to properly monitor Agent APIs for our Kubrick open-source course. In particular, we’ll cover two key components: the Agent API and the Agent Observability layer.

You can find api.py, which contains the FastAPI implementation, at this location.

We’re using Opik as the main tool for building the Agent Observability layer, specifically through its opik.track decorator.

The code I’ll be walking through in the video is part of the GroqAgent implementation, which you can find here.
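If you’ve never used opik.track before, here’s a minimal sketch of the general idea. It is not the course’s GroqAgent code: ToyAgent, route_query and chat are hypothetical names made up for illustration. It assumes the opik Python package is installed and configured; if it isn’t, the snippet falls back to a no-op decorator so it still runs.

```python
# Minimal sketch of decorator-based tracing with Opik's `opik.track`.
# Assumption: `opik` is installed and configured. If the import fails, we fall
# back to a no-op decorator with the same call shape, so nothing is recorded.
try:
    import opik

    track = opik.track
except ImportError:
    def track(func=None, **_kwargs):
        # Supports both `@track` and `@track(name=...)`; returns functions unchanged.
        if func is None:
            return lambda f: f
        return func


class ToyAgent:
    """Hypothetical agent used only for illustration (not the course's GroqAgent)."""

    @track(name="route_query")
    def route_query(self, question: str) -> str:
        # Hypothetical routing rule: video questions go to a tool, the rest to chat.
        return "tool" if "video" in question.lower() else "chat"

    @track(name="agent_chat")
    def chat(self, question: str) -> str:
        # Calling another tracked method from inside a tracked one lets the
        # tracer record route_query as a child of agent_chat.
        route = self.route_query(question)
        return f"[{route}] answer to: {question}"


if __name__ == "__main__":
    agent = ToyAgent()
    print(agent.chat("What happens in this video?"))
```

With Opik configured, a single call to ToyAgent().chat(...) should be logged as one trace, with the route_query call nested under agent_chat, so you can inspect each step’s inputs and outputs instead of only the final answer.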

Now, you can go up again and click the play button! ☝️

Let me know what you think of this new format; I’d love to hear your thoughts!

See you next Wednesday! 👋

Why is this course free?

This course is free and open source, thanks to our sponsors: Pixeltable and Opik!