How we built an MCP Agent from scratch

No magic. No fluff. Just an MCP Agent that actually works.

Ah, Linus! Always ready with a line that makes us laugh and feel slightly called out.

Well, swap “device drivers” for “Agents” and you’ll know exactly where my head’s at.

It’s 2025, and sure, we’ve got CrewAI, n8n, and a dozen other shiny tools.

But sometimes, you just want to get your hands dirty and build something real, start to finish.

So that’s the vibe: a bit nostalgic, a bit stubborn … and 100% builder mindset.

In this article, I’ll show you how we did exactly that with our Kubrick Agent: a fully functional agent, built from scratch, that talks directly to our Video Processing MCP Server (if you’ve been following along, you know the one). No shortcuts, friend. Let’s get into it 👇

The Kubrick Agent

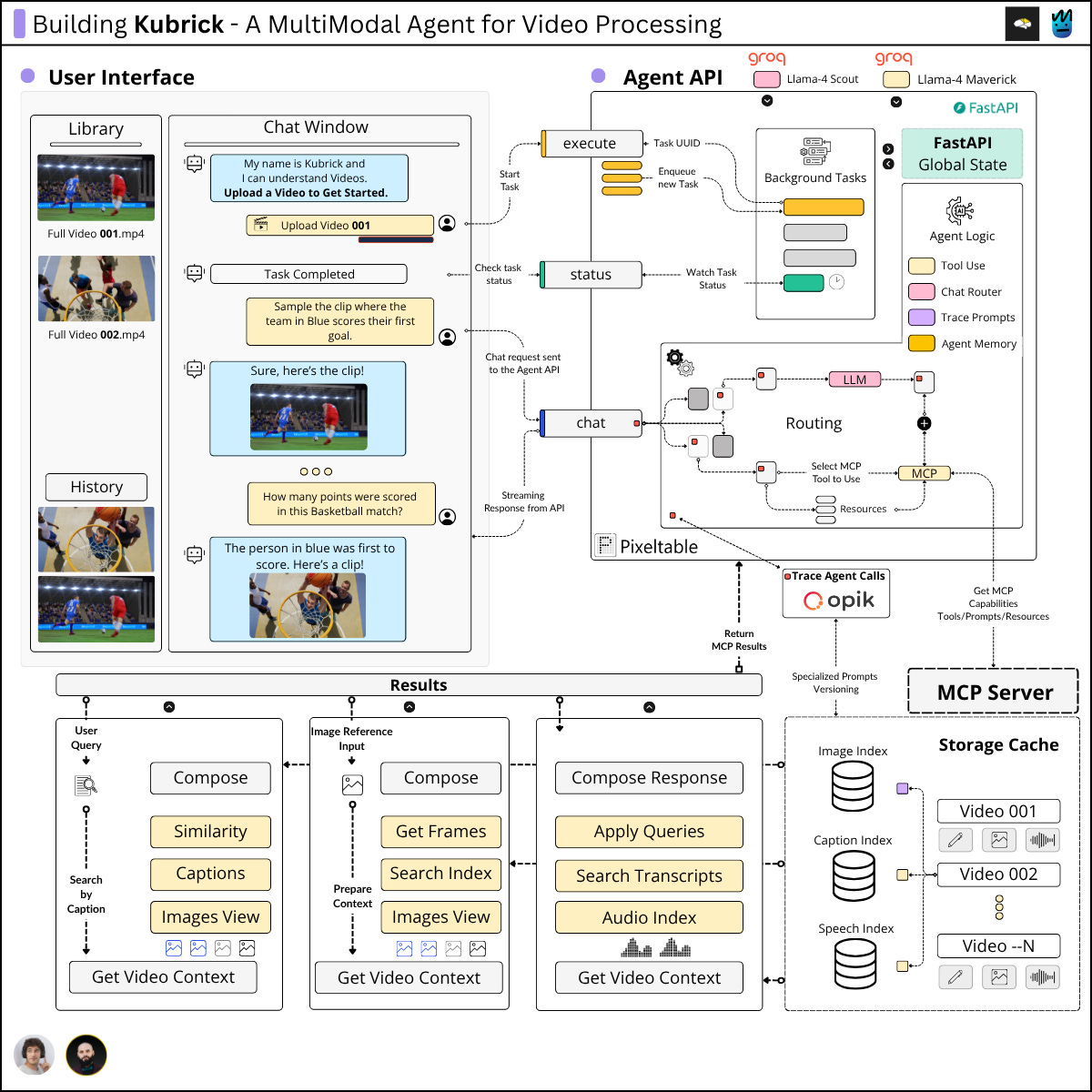

I know that diagram might look a little scary at first, but don’t sweat it — we’re going to break it all down step by step, starting today and wrapping it up next week.

💡 Today’s spotlight: the Agent implementation!

So, what makes up our Agent (or MCP Agent, if we’re being fancy)?

It’s got four key parts:

Core agent logic - This is the brains of the operation. We’re defining how it routes tasks, uses tools, and does its thing (all from scratch). No agent frameworks here, just plain Groq API calls!

Memory layer - Agents need to remember stuff, right? For short-term memory, we’re using Pixeltable to keep things persistent.

MCP Client + MCP Tool management - We cooked up an MCP client so our agent can connect to the tools and prompts living in the MCP Server.

Agent tracing - A peek behind the scenes to see what the agent’s up to. We’ll cover this bit next week!

Alright, let’s see how the core logic works.

Agent Core Logic

When it comes to the core agent logic, there are two classes in the source code you’ll want to pay extra attention to: BaseAgent and GroqAgent.

GroqAgent is just a subclass of BaseAgent, but it’s where things get interesting. Let’s take a quick look at the chat method inside GroqAgent.

This method takes in the user’s message, plus a video_path and image_base64 if those are provided. From there, it runs through four major steps:

Routing: First, it figures out if any tools are needed to answer the user’s query.

Tool use: If tools are needed, it calls _run_with_tool, which is where the agent decides which tool from the MCP Server to use.

Simple response: If no tools are needed, it calls _respond_general to generate a straightforward answer instead.

Memory storage: Finally, it saves both the user’s query and the assistant’s response in a Pixeltable table.

More on that last step in the next section 👇
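Before we get there, here’s a minimal sketch of that four-step control flow, written only to make the routing logic concrete. It is not the Kubrick code: `SketchAgent` is a hypothetical name, the routing decision and both answer paths are injected as plain callables (in the real agent they are Groq calls and MCP tool calls), and a Python list stands in for the Pixeltable table. Forwarding `video_path` and `image_base64` to the tool path is also an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class SketchAgent:
    """Hypothetical stand-in for GroqAgent.chat's control flow (not the Kubrick code)."""

    # Routing step: in a real agent this would be an LLM call (e.g. to Groq).
    needs_tool: Callable[[str], bool]
    # Stand-in for _run_with_tool: receives the message plus optional media inputs.
    run_with_tool: Callable[[str, Optional[str], Optional[str]], str]
    # Stand-in for _respond_general: answers without touching the MCP Server.
    respond_general: Callable[[str], str]
    # Stand-in for the Pixeltable memory table: a plain in-process list.
    memory: list = field(default_factory=list)

    def chat(self, message: str, video_path: Optional[str] = None,
             image_base64: Optional[str] = None) -> str:
        # 1. Routing: decide whether any tool is needed for this query.
        if self.needs_tool(message):
            # 2. Tool use: delegate to the tool path, forwarding media inputs (assumption).
            response = self.run_with_tool(message, video_path, image_base64)
        else:
            # 3. Simple response: answer directly, no tools involved.
            response = self.respond_general(message)
        # 4. Memory storage: persist both sides of the exchange.
        self.memory.append({"role": "user", "content": message})
        self.memory.append({"role": "assistant", "content": response})
        return response


if __name__ == "__main__":
    agent = SketchAgent(
        needs_tool=lambda m: "video" in m.lower(),
        run_with_tool=lambda m, video, image: f"[tool] processed {video}",
        respond_general=lambda m: "[general] Hi there!",
    )
    print(agent.chat("Find the key scene in this video", video_path="clip.mp4"))
    print(agent.chat("Hello!"))
```

Injecting the three steps as callables keeps the sketch testable without an API key; the shape of `chat` (route, branch, store) is the part that carries over.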

Pixeltable Memory Layer

Alright, let’s talk about that memory piece.

Once the Agent generates a response — whether it uses a tool or not — it needs to store what just happened. That’s where the Pixeltable Memory Layer comes in.

In our case, we’re using Pixeltable to persist (just) the short-term memory. Every user query, along with the Agent’s response, gets logged into a Pixeltable table.

💡 This makes it easy to look back at interactions, debug issues, or just keep the context for future chats.

It’s simple but super handy. It also sets the stage for more advanced memory strategies down the line if we need them.
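To illustrate the idea of an append-only conversation log, here’s a small sketch. It swaps Pixeltable for Python’s built-in `sqlite3` so it runs anywhere; the Kubrick repo uses a Pixeltable table instead. The class name, column names, and the `last(n)` helper are hypothetical choices for this example, not the repo’s schema.

```python
import sqlite3
from datetime import datetime, timezone


class ShortTermMemory:
    """Append-only chat log; sqlite3 stands in for the Pixeltable table (assumption)."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memory ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "role TEXT NOT NULL, content TEXT NOT NULL, created_at TEXT NOT NULL)"
        )

    def add(self, role: str, content: str) -> None:
        # Every message is stored with a UTC timestamp so interactions can be reviewed later.
        self.conn.execute(
            "INSERT INTO memory (role, content, created_at) VALUES (?, ?, ?)",
            (role, content, datetime.now(timezone.utc).isoformat()),
        )
        self.conn.commit()

    def last(self, n: int = 10) -> list[dict]:
        # Fetch the n most recent messages, then reverse so the oldest comes first,
        # which is the order chat-completion APIs expect.
        rows = self.conn.execute(
            "SELECT role, content FROM memory ORDER BY id DESC LIMIT ?", (n,)
        ).fetchall()
        return [{"role": role, "content": content} for role, content in reversed(rows)]


if __name__ == "__main__":
    memory = ShortTermMemory()
    memory.add("user", "What happens at the start of the clip?")
    memory.add("assistant", "A cat jumps onto the table.")
    print(memory.last(2))
```

Feeding `last(n)` back into the next request is one simple way to "keep the context for future chats"; more advanced memory strategies would replace that helper, not the log itself.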

MCP Client + MCP Tool management

Just like Cursor or Claude Desktop, our Kubrick Agent needs an MCP Client to hook into the Video Processing MCP Server.

Thanks to FastMCP, this is embarrassingly easy.

Seriously, take a peek at the BaseAgent code and you’ll see how straightforward it is to create an MCP Client with this library.
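Part of why the client code stays so short is that FastMCP hides the wire protocol: you open a `Client` as an async context manager and await methods like `list_tools()` or `call_tool(name, arguments)`. To show what that saves you from, here’s a stdlib-only sketch of the JSON-RPC 2.0 requests an MCP client sends for tool discovery and tool calls. The `McpRequestBuilder` class and the `process_video` tool name are hypothetical, and a real session also needs an `initialize` handshake and a transport, both of which FastMCP handles for you.

```python
import itertools
import json
from typing import Any


class McpRequestBuilder:
    """Builds JSON-RPC 2.0 request messages in the shape MCP uses.

    A sketch only: FastMCP's Client also performs the initialize handshake,
    manages the transport, and parses responses, none of which is shown here.
    """

    def __init__(self) -> None:
        # JSON-RPC requests carry an id so responses can be matched to them.
        self._ids = itertools.count(1)

    def _request(self, method: str, params: dict[str, Any]) -> str:
        message = {"jsonrpc": "2.0", "id": next(self._ids), "method": method, "params": params}
        return json.dumps(message)

    def list_tools(self) -> str:
        # Tool discovery: ask the server which tools it exposes.
        return self._request("tools/list", {})

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        # Tool use: invoke one tool by name with JSON arguments.
        return self._request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    builder = McpRequestBuilder()
    print(builder.list_tools())
    print(builder.call_tool("process_video", {"video_path": "clip.mp4"}))  # hypothetical tool
```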

Apart from the MCP Client, we also need a Tool discovery method. This handles fetching all the tools from the MCP Server and translating them into the format that Groq expects.

If you’re curious about how that works under the hood, check out the GroqTool class — it’s responsible for this transformation logic and makes sure the tools are ready to roll when the Agent needs them.
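As a rough idea of what such a translation involves, here’s a minimal sketch (not the GroqTool class itself). MCP tools describe their inputs with a JSON Schema, and Groq’s chat API accepts OpenAI-style function definitions, so the mapping is mostly a rename into `{"type": "function", "function": {...}}`. Going the other way, the model returns tool-call arguments as a JSON string, which must be decoded before being forwarded to the MCP Server. `McpToolSpec`, `to_groq_tool`, `parse_tool_call`, and the example tool are hypothetical names.

```python
import json
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class McpToolSpec:
    """Hypothetical minimal mirror of an MCP tool definition."""

    name: str
    description: Optional[str]
    input_schema: dict[str, Any]  # MCP tools describe their inputs with JSON Schema


def to_groq_tool(tool: McpToolSpec) -> dict[str, Any]:
    """Translate an MCP tool into the OpenAI-style function schema Groq accepts."""
    return {
        "type": "function",
        "function": {
            "name": tool.name,
            "description": tool.description or "",
            # JSON Schema carries over as-is; fall back to an empty object schema.
            "parameters": tool.input_schema or {"type": "object", "properties": {}},
        },
    }


def parse_tool_call(name: str, arguments: str) -> tuple[str, dict[str, Any]]:
    """Decode a model tool call, whose arguments arrive as a JSON string."""
    return name, json.loads(arguments or "{}")


if __name__ == "__main__":
    spec = McpToolSpec(
        name="get_video_clip",  # hypothetical tool
        description="Extract a clip from a video",
        input_schema={"type": "object", "properties": {"start": {"type": "number"}}},
    )
    print(to_groq_tool(spec))
    print(parse_tool_call("get_video_clip", '{"start": 12.5}'))
```

Because the schema passes through unchanged, any tool added to the MCP Server shows up for the model without touching the agent code.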

With the tool discovery and this tool translation logic in place, the Groq Agent would never suspect it’s actually getting its tools from a remote MCP Server! 😉

It all just works seamlessly behind the scenes.

📌 This article is just a quick intro to everything you’ll find in the Kubrick repo. I encourage you to dive in — try configuring it yourself, tweak it, improve it, or even break it!

That’s the best way to really learn how it all works.

Feels so good after a solid session of building from scratch!

Better than yoga. Way better than CrossFit.

Just a good cup of coffee and a multimodal agent, built from the ground up.

But hey, it’s time for me to say goodbye and get back to work. Remember, next week I’ll be covering Agent Monitoring and the Agent API. Plus, Alex has a top-quality article lined up for us this Saturday. The Neural Bros never stop building! 🤣

We’re trying to be the antidote to all those AI influencers who talk too much but build too little. Less hype, more hands-on.

See you next Wednesday, builder 🫶
