Your first Video Agent. Multimodality meets MCP

Master the basics of multimodal video agents in our new hands-on course.

I’ve been hinting that something’s been cooking … and it’s finally ready to serve.

Big day, builders! 🍲

I'm excited to officially launch a brand-new hands-on course, built in collaboration with Alex. Drumroll, please … Introducing Kubrick, the MCP multimodal agent for video processing!

Let’s gooo.

So, what’s this course all about?

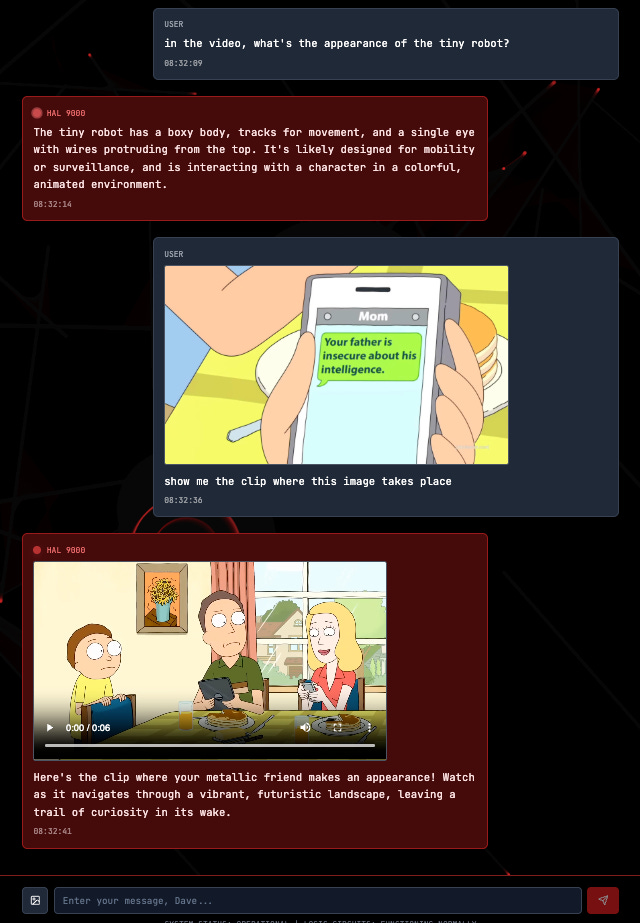

Besides the fun Kubrick reference and the HAL 9000 vibes… what is a Video Agent, really?

That’s exactly what this course is here to unpack.

But since this is just the intro (you could even call it Lesson 0), we’re keeping things light.

Today, I want to give you a clear picture of what Kubrick actually is, what it can do, and how it works—at a high level. Over the next few weeks, we’ll dive into each part in detail.

But let’s not get ahead of ourselves …

First, here’s what Kubrick can do 👇

Kubrick, the basic functionalities

When Alex and I decided to team up on a hands-on course, we immediately agreed it had to be “video-focused”.

Why?

Because there just aren’t many (if any) courses out there about video agents—and we thought it was time to shed some light on this fascinating space.

Here’s how it works: whenever you upload a video to the Kubrick system—and after it’s been fully processed (think: embeddings created, frames captioned, indexes built…)—the video becomes instantly available within the agent’s context.

What does that mean?

Well, with the right tools, Kubrick can:

Answer specific questions about the video (e.g. “What’s Morty’s T-shirt color?”)

Clip scenes based on a user query (e.g. “Show me the clip where HAL says ‘I’m sorry, Dave. I’m afraid I can’t do that.’”)

Clip scenes based on an image
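Under the hood, both clipping features come down to one idea: embed the query, find the most similar indexed frame, and cut a window around its timestamp. The sketch below shows that retrieval step in plain Python with hand-made 3-dimensional vectors. It assumes a shared text/image embedding space (a CLIP-style model), so a text query and an image query can be compared against the same frame index. The `find_clip` helper, the 5-second padding, and the toy vectors are all hypothetical, not Kubrick's actual code.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_clip(
    query_emb: list[float],
    frame_index: list[tuple[float, list[float]]],
    video_duration: float,
    pad: float = 5.0,
) -> tuple[float, float]:
    """Return (start, end) in seconds for a clip centred on the best-matching frame.

    frame_index holds (timestamp_in_seconds, embedding) pairs, one per sampled frame.
    """
    best_ts, _ = max(frame_index, key=lambda item: cosine(query_emb, item[1]))
    # Clamp the window so it never starts before 0 or ends after the video does.
    return max(0.0, best_ts - pad), min(video_duration, best_ts + pad)


# Toy index: three sampled frames with hand-made embeddings (hypothetical values).
frame_index = [
    (0.0, [1.0, 0.0, 0.0]),
    (10.0, [0.0, 1.0, 0.0]),
    (20.0, [0.0, 0.0, 1.0]),
]

print(find_clip([0.1, 0.9, 0.0], frame_index, video_duration=25.0))  # (5.0, 15.0)
print(find_clip([0.0, 0.0, 1.0], frame_index, video_duration=22.0))  # (15.0, 22.0)
```

In a real pipeline the index would hold one embedding per sampled frame (or per caption), and the clip boundaries would be handed to a video library to actually cut the file.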

We’ll dive into how all that works next week—but for now, those are the core features you can expect.

Now that you’ve got the big picture, let’s break down the three main components that make this system tick.

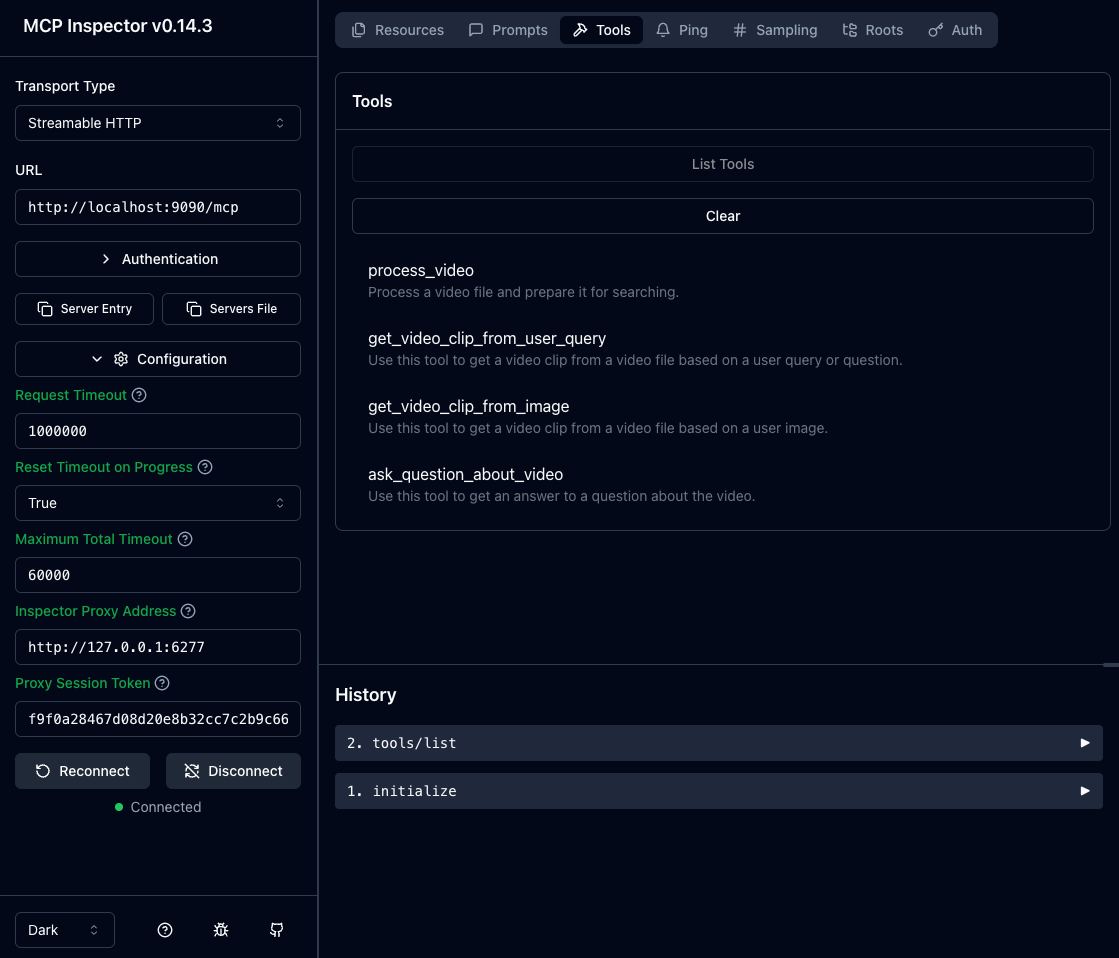

First component - An MCP server for video processing

There are plenty of “MCP projects” out there that stop at hooking up an existing MCP server to something like Cursor or Claude Desktop.

And hey, that’s definitely useful—but it’s not enough if you really want to understand how to build a production-ready MCP server.

So, for Kubrick, we built a dedicated MCP server from scratch using FastMCP. All the tools, resources and prompts live right there.

Let’s start with the tools, the core of Kubrick’s video processing power.

Building video processing tools with Pixeltable

Kubrick has 4 available tools:

process_video - Processes a video file and prepares it for searching

get_video_clip_from_user_query - Gets a video clip from a video file based on a user query or question

get_video_clip_from_image - Gets a video clip from a video file based on a user-provided image

ask_question_about_video - Gets an answer to a question about the active video
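Whichever client calls them, these tools are invoked over MCP’s JSON-RPC 2.0 protocol with the `tools/call` method. A FastMCP client builds this message for you; the snippet below just makes the wire format visible. The argument names (`video_path`, `user_query`) and the file path are hypothetical, since the real tool signatures are covered later in the course.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialise an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical arguments: the real Kubrick tool signature may differ.
payload = make_tool_call(
    1,
    "get_video_clip_from_user_query",
    {"video_path": "videos/example.mp4", "user_query": "HAL refuses to open the pod bay doors"},
)
print(payload)
```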

We’ll explore each tool in the coming weeks, but for now, here’s the key takeaway: every tool under the hood is powered by a library called Pixeltable.

Pixeltable handles incremental storage, transformation, indexing, and orchestration for multimodal data—which made it a perfect match for Kubrick, since we’re working with video, images, and audio.

Instead of hand-coding logic to extract audio, split video into frames, or turn each frame into an embedding, Pixeltable’s abstractions and built-in functions take care of it all.

Super efficient, super clean 👌
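The “incremental” part is what matters for video: when a new video arrives, only that row gets processed, while videos that were already processed are left alone. The toy class below imitates that idea in plain Python. It is not Pixeltable’s API (Pixeltable expresses the same idea with computed columns on its tables); the class name, the 5-second frame sampling, and the column names are assumptions made for illustration.

```python
from typing import Any, Callable

Row = dict[str, Any]


class IncrementalTable:
    """Toy table whose computed columns run once per row, never again for old rows."""

    def __init__(self) -> None:
        self.rows: list[Row] = []
        self.computed: dict[str, Callable[[Row], Any]] = {}
        self.evaluations = 0  # total number of computed-column evaluations so far

    def add_computed_column(self, name: str, fn: Callable[[Row], Any]) -> None:
        """Register a derived column and backfill it for rows that already exist."""
        self.computed[name] = fn
        for row in self.rows:
            self._evaluate(row, name)

    def insert(self, **values: Any) -> None:
        """Insert a row; only this new row has its computed columns evaluated."""
        row: Row = dict(values)
        for name in self.computed:  # registration order, so later columns can use earlier ones
            self._evaluate(row, name)
        self.rows.append(row)

    def _evaluate(self, row: Row, name: str) -> None:
        row[name] = self.computed[name](row)
        self.evaluations += 1


videos = IncrementalTable()
videos.insert(video="a.mp4", duration_s=12.0)
# Hypothetical derived columns: sample a frame every 5 s, then count the samples.
videos.add_computed_column(
    "frame_times", lambda r: [float(t) for t in range(0, int(r["duration_s"]), 5)]
)
videos.add_computed_column("n_frames", lambda r: len(r["frame_times"]))
videos.insert(video="b.mp4", duration_s=7.0)  # only b.mp4 is processed here

print(videos.rows[0]["frame_times"], videos.rows[1]["frame_times"], videos.evaluations)
# [0.0, 5.0, 10.0] [0.0, 5.0] 4
```

The evaluation counter shows the payoff: adding the second video costs two evaluations (one per computed column), not a reprocessing of the whole table.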

So that covers the tools. Now… what about the prompts?

Prompts - Opik versioning

Another key difference from most MCP projects?

We’re not just exposing tools in the MCP server—we’re also defining our prompts there.

These prompts are fetched by the agent that lives in the next component (API), and we’re versioning them using Opik, so we can iterate and improve over time with proper tracking.
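To make “versioning prompts” concrete: every time a prompt’s text changes, a new version is recorded, and the agent can fetch either the latest version or pin an older one. The sketch below is a stdlib stand-in for that behaviour, not Opik’s prompt library API; the `PromptRegistry` class and the `routing_prompt` name are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """Toy prompt store: every changed template is kept as a new numbered version."""

    versions: dict[str, list[str]] = field(default_factory=dict)

    def commit(self, name: str, template: str) -> int:
        """Record a template and return its 1-based version number."""
        history = self.versions.setdefault(name, [])
        if not history or history[-1] != template:  # identical text is not a new version
            history.append(template)
        return len(history)

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Fetch the latest template, or a pinned 1-based version."""
        history = self.versions[name]
        if version is None:
            return history[-1]
        if not 1 <= version <= len(history):
            raise ValueError(f"{name} has no version {version}")
        return history[version - 1]


registry = PromptRegistry()
v1 = registry.commit("routing_prompt", "You are Kubrick. Decide if a tool is needed.")
v2 = registry.commit("routing_prompt", "You are Kubrick, a video assistant. Decide if a tool is needed.")
v3 = registry.commit("routing_prompt", "You are Kubrick, a video assistant. Decide if a tool is needed.")

print(v1, v2, v3)                         # 1 2 2
print(registry.get("routing_prompt"))     # latest text (version 2)
print(registry.get("routing_prompt", 1))  # pinned version 1
```

Skipping identical commits keeps the history meaningful: a version number only moves when the prompt actually changed, which is what makes comparing runs across versions useful.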

Now that we’ve covered the first component, it’s time to move on to the second: the Agentic API.

Second component - API, Stateful agents and MCP Clients

This component is a bit closer to some of my earlier projects—like PhiloAgents. At its core, it’s a FastAPI app that exposes our agent (Kubrick) to the outside world.

But there are a few things about our implementation that I think you’ll find especially interesting:

We’re building the agent from scratch, with no agent frameworks. We’re also defining our own FastMCP client and translating MCP tools into Groq tools.

We’re creating stateful agents with Pixeltable as the persistence layer.

We built a custom Opik observability layer from the ground up—defining traces, logging… the whole thing.
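Translating MCP tools into Groq tools is mostly a field mapping. Per the MCP specification, a `tools/list` result describes each tool with `name`, `description`, and a JSON Schema `inputSchema`; Groq’s chat completions API accepts OpenAI-style tools of the form `{"type": "function", "function": {...}}` with a JSON Schema `parameters` field. A minimal sketch, assuming the tool definitions arrive as plain dicts (a real MCP client returns typed objects exposing the same fields) and using a hypothetical `question` argument:

```python
def mcp_tool_to_groq(tool: dict) -> dict:
    """Map an MCP tool definition onto the OpenAI-style function tool that Groq accepts."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP already describes arguments with JSON Schema, so it is reused unchanged.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


# Shaped like an entry from an MCP `tools/list` result; the `question` argument is hypothetical.
mcp_tools = [
    {
        "name": "ask_question_about_video",
        "description": "Gets an answer to a question about the active video",
        "inputSchema": {
            "type": "object",
            "properties": {"question": {"type": "string"}},
            "required": ["question"],
        },
    }
]

groq_tools = [mcp_tool_to_groq(t) for t in mcp_tools]
print(groq_tools[0]["function"]["name"])  # ask_question_about_video
```

The resulting list is what goes into the `tools` field of a Groq chat completions request.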

I’m really excited to walk you through how to build this kind of agent, step by step.

We learned a lot about how MCP connections really work, and I think you will too!
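Building the agent without a framework comes down to a small loop: send the conversation to the model, run any tool it asks for, append the results, and repeat until it answers in plain text. The sketch below assumes Groq’s OpenAI-compatible message format (`tool_calls` with JSON-encoded `arguments`, answered by `role: "tool"` messages). `fake_llm` and `fake_call_tool` are hypothetical stubs standing in for the Groq call and the FastMCP client so the loop runs offline. In a stateful agent, `messages` would be loaded from and saved to the persistence layer (Pixeltable, in Kubrick’s case) rather than rebuilt on every request.

```python
import json
from typing import Callable

LLM = Callable[[list[dict]], dict]       # stand-in for a Groq chat completion call
ToolCaller = Callable[[str, dict], str]  # stand-in for the FastMCP client's tool call


def run_agent(user_message: str, llm: LLM, call_tool: ToolCaller, max_steps: int = 5) -> str:
    """Minimal tool-calling loop: ask the model, run requested tools, repeat."""
    messages: list[dict] = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = llm(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:  # a plain-text reply is the final answer
            return reply["content"]
        for call in tool_calls:
            args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
            result = call_tool(call["function"]["name"], args)
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": result})
    raise RuntimeError("agent did not produce an answer within max_steps")


def fake_llm(messages: list[dict]) -> dict:
    """Hypothetical stub: request a tool on the first turn, then answer from its result."""
    last = messages[-1]
    if last["role"] == "user":
        return {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_1",
                "type": "function",
                "function": {
                    "name": "ask_question_about_video",
                    "arguments": json.dumps({"question": last["content"]}),
                },
            }],
        }
    return {"role": "assistant", "content": f"Answer based on: {last['content']}"}


def fake_call_tool(name: str, args: dict) -> str:
    """Hypothetical stub for the MCP client; a real one would call the Kubrick server."""
    return f"[stub result from {name}]"


print(run_agent("What's Morty's T-shirt color?", fake_llm, fake_call_tool))
# Answer based on: [stub result from ask_question_about_video]
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools forever.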

Third component - a HAL 9000-inspired UI

Last but not least—even though we won’t be covering it in the course (since the focus isn’t on frontend)—we needed a solid UI to bring Kubrick to life.

That’s the frontend you’ve been seeing in the screenshots I’ve shared. We were aiming for that HAL 9000 vibe, and we think we landed it 🤣

We also built in a video library, so you can browse all your processed videos and choose which one to set as active.

Simple, clean, and functional.

And I think that’s enough for today!

Consider this article the appetizer—the real fun starts in the weeks ahead.

We’ll be digging into MCP servers and clients, Pixeltable-powered video pipelines, custom agent observability, and more.

Hope you’re as excited as we are, because there’s a lot of cool stuff coming your way!!

Next Wednesday, we’re jumping into Pixeltable and the full video processing pipeline.

Until then…

Happy building! 👋

![Kubrick AI demo (video-to-GIF)](https://substackcdn.com/image/fetch/$s_!UHfQ!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F0cf959bb-44ab-4ca5-9995-92b8fadafcea_800x480.gif)
