A hands-on project to master multimodal agents

For those looking to integrate vision and speech into their agentic workflows

Every AI engineer falls into one of two groups.

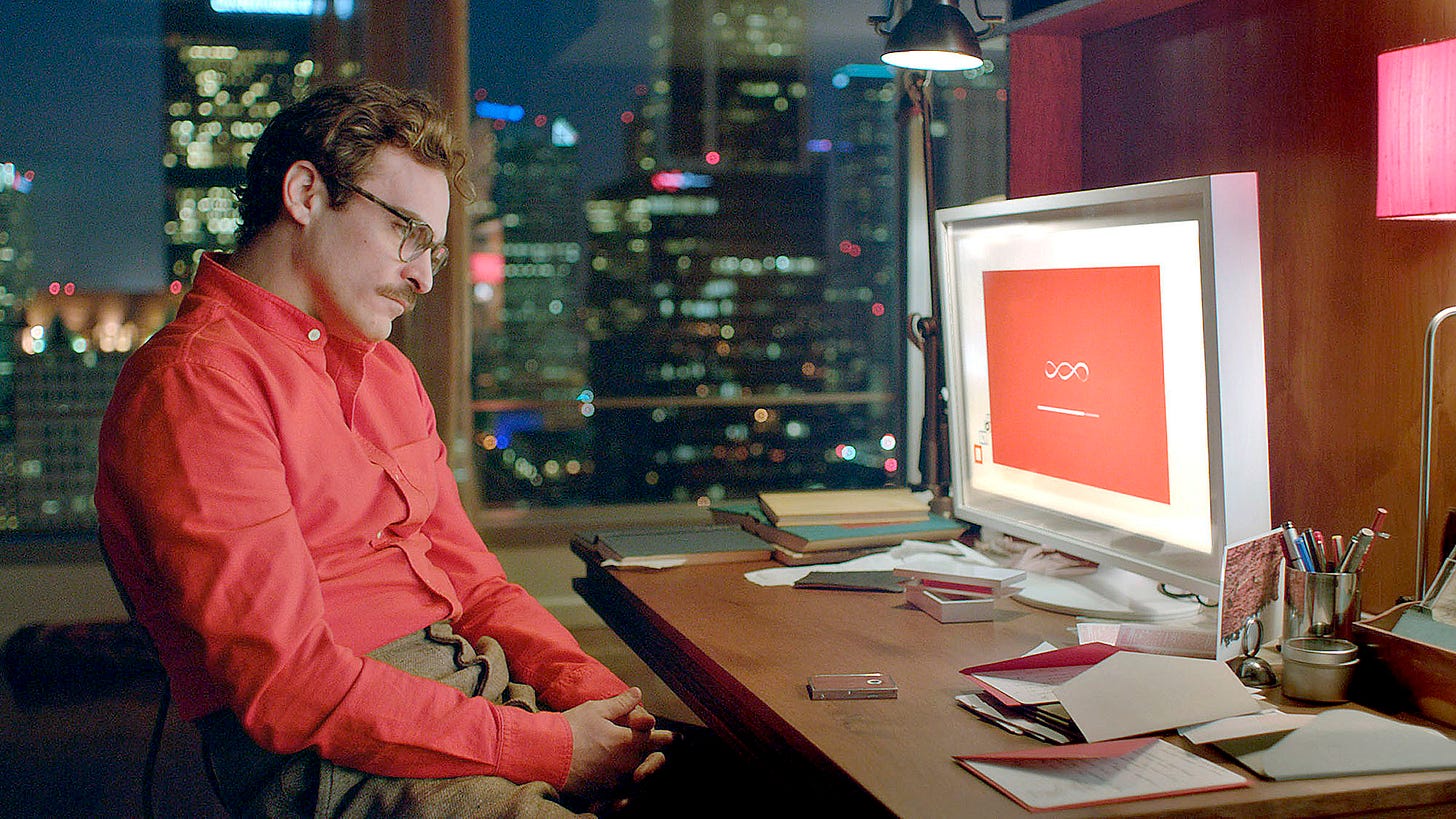

If you’re in the first, you recognized the image above instantly—and I’ve definitely got your attention.

If you’re in the second, you’re probably just wondering why Joaquin Phoenix is staring at a screen (you do know who Joaquin Phoenix is … right?). And maybe you’re asking yourself what’s so special about this scene.

In that case, feel free to stop reading and go watch Her.

Okay, I’m kidding 🤣

You can keep reading. I just take any chance I get to bring up Her, since it’s one of my favorite sci-fi films of all time.

Now, back to the point.

Today’s article is about something that started as a tiny side project. Nothing serious at first. But then it started to grow … and grow …

And now? That little idea has turned into the most successful open-source course I’ve ever launched on The Neural Maze!

Ladies and gentlemen … meet Ava 👇

Like the other open-source courses on The Neural Maze, this one includes written and video lessons, along with a complete code repository. You can use it to better understand the concepts, or tailor Ava to your own specific use cases.

We’d love to hear what you build!

👉 Check out the code repository here to access all the resources, articles, and videos!

Ava, a multimodal agent that uses WhatsApp

I met Jesús after watching his video about Samantha OS, where he showed how to build an assistant (yes, like in Her) using OpenAI’s Realtime API. The moment I finished that video, I knew I had to build something with him. So I reached out, we hopped on a call, and within minutes, we had an idea:

Let’s build an AI Companion: Ava.

And yes, the name is a nod to Ex Machina. But don’t worry, no murder-y instincts this time 😄

The idea was simple.

Sure, there are tons of tutorials out there on how to build chatbots or assistants. But 99% of them? Super shallow. Boring. Robotic. Cold.

We wanted to do something different—build an AI assistant that actually feels human. One that can see, hear, have a personality, manage its own schedule, and most importantly … talk to you not through a clunky Streamlit UI, but through something natural and intuitive (like WhatsApp).

Fast forward a few months: the code is done, the articles are written, the videos are recorded. And the course? It’s structured into 6 solid lessons that cover the whole journey.

Let’s break them down! 👇

Lesson 1: Project overview

This first lesson is all about getting the full picture. We’ll walk you through the tech stack we’re using—Groq, LangGraph, Qdrant, ElevenLabs, and TogetherAI—and break down the different modules we’ll be building along the way.

By the end of it, you’ll have a clear idea of how everything fits together and what we’re aiming to create.

Lesson 2: Dissecting Ava’s brain

Don’t worry—the title’s just a metaphor. Ava’s totally fine 🤣

In this lesson, we’re exploring LangGraph, starting with the fundamentals (nodes, edges, state, etc.) and moving into more advanced workflows, including the one behind Ava’s “brain”.
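If nodes, edges and state still feel abstract, here is a tiny framework-free sketch of the idea in Python. It is not LangGraph’s API (LangGraph gives you `StateGraph` for this). The node names (`router`, `conversation`, `image`, `audio`) and the keyword-based routing are hypothetical stand-ins, not Ava’s actual implementation. Each node returns a partial update that gets merged into the shared state, and a conditional edge reads that state to pick the next node.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

State = Dict[str, object]
Node = Callable[[State], State]   # a node returns a partial state update
Router = Callable[[State], str]   # a conditional edge returns the next node's name
END = "__end__"

@dataclass
class MiniGraph:
    entry: str
    nodes: Dict[str, Node] = field(default_factory=dict)
    edges: Dict[str, str] = field(default_factory=dict)       # fixed edges
    routers: Dict[str, Router] = field(default_factory=dict)  # conditional edges

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = dst

    def add_conditional_edge(self, src: str, router: Router) -> None:
        self.routers[src] = router

    def run(self, state: State) -> State:
        current = self.entry
        while current != END:
            state = {**state, **self.nodes[current](state)}  # merge the node's update
            if current in self.routers:
                current = self.routers[current](state)
            else:
                current = self.edges.get(current, END)  # no edge means we're done
        return state

# Hypothetical nodes: a keyword router instead of an LLM-based one.
def router_node(state: State) -> State:
    text = str(state["message"]).lower()
    if "picture" in text or "image" in text:
        return {"workflow": "image"}
    if "voice" in text:
        return {"workflow": "audio"}
    return {"workflow": "conversation"}

def conversation_node(state: State) -> State:
    return {"response": f"text reply to: {state['message']}"}

def image_node(state: State) -> State:
    return {"response": "[generated image]"}

def audio_node(state: State) -> State:
    return {"response": "[voice note]"}

graph = MiniGraph(entry="router")
graph.add_node("router", router_node)
graph.add_conditional_edge("router", lambda s: str(s["workflow"]))
for name, fn in [("conversation", conversation_node), ("image", image_node), ("audio", audio_node)]:
    graph.add_node(name, fn)
    graph.add_edge(name, END)

result = graph.run({"message": "Send me a picture of your day"})
print(result["workflow"], result["response"])  # image [generated image]
```

Swapping the keyword check for an LLM call is where a router like this gets interesting. The graph mechanics stay the same.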

Lesson 3: Unlocking Ava’s memories

In this lesson, we’ll implement both short-term and long-term memory systems for Ava.

Short-term memory—essentially the chat history of your interactions with Ava—will be stored in SQLite. For long-term memory, we’ll let Ava decide what’s worth remembering. She’ll extract relevant details from your conversations and store them as embeddings in Qdrant.

So don’t be surprised if she remembers you went to the dentist three months ago.
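Here’s a rough sketch of both memory layers using only the Python standard library. Short-term memory uses the real `sqlite3` module, with an in-memory database for the demo. For long-term memory, a plain list and a bag-of-words vector stand in for Qdrant and a real embedding model. Treat `toy_embed`, `remember` and `recall` as hypothetical helpers. The step where Ava decides what’s worth remembering is left out: `remember()` is simply called with an already-extracted fact.

```python
import math
import re
import sqlite3
from collections import Counter
from typing import List, Tuple

# --- Short-term memory: chat history in SQLite (in-memory DB for the demo) ---
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE messages (id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "thread_id TEXT, role TEXT, content TEXT)"
)

def save_message(thread_id: str, role: str, content: str) -> None:
    conn.execute(
        "INSERT INTO messages (thread_id, role, content) VALUES (?, ?, ?)",
        (thread_id, role, content),
    )
    conn.commit()

def recent_history(thread_id: str, limit: int = 10) -> List[Tuple[str, str]]:
    # Fetch the newest `limit` messages, then return them oldest-first.
    rows = conn.execute(
        "SELECT role, content FROM messages WHERE thread_id = ? "
        "ORDER BY id DESC LIMIT ?",
        (thread_id, limit),
    ).fetchall()
    return list(reversed(rows))

# --- Long-term memory: toy vectors standing in for embeddings + Qdrant ---
def toy_embed(text: str) -> Counter:
    # Bag-of-words counts; a real setup would call an embedding model here.
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

long_term: List[Tuple[str, Counter]] = []  # stand-in for a vector collection

def remember(fact: str) -> None:
    long_term.append((fact, toy_embed(fact)))

def recall(query: str, k: int = 1) -> List[str]:
    q = toy_embed(query)
    ranked = sorted(long_term, key=lambda item: cosine(q, item[1]), reverse=True)
    return [fact for fact, _ in ranked[:k]]

save_message("user-1", "user", "I went to the dentist today")
save_message("user-1", "assistant", "Oh no, how did it go?")
print(recent_history("user-1"))

remember("User went to the dentist")
remember("User's favorite film is Her")
print(recall("How did the dentist appointment go?"))  # ['User went to the dentist']
```

The split mirrors the lesson: the SQLite table answers “what did we just say?”, while the vector search answers “what do I know about you that’s relevant right now?”.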

Lesson 4: Giving Ava a voice

Voice agents are here to stay, and Jesús and I are really bullish on this technology. That’s why we decided to include a voice pipeline in the course—made up of two core systems:

Speech-to-Text (STT) using Whisper

Text-to-Speech (TTS) using ElevenLabs custom voices

Ava can not only understand your voice notes … she can reply with her own.
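Stripped down, the voice pipeline is a simple chain: voice note in, transcription, the agent’s text reply, synthesized voice note out. The sketch below wires that chain together with placeholder functions so it runs without API keys. In the course, those slots would be filled by Whisper and ElevenLabs, but their actual client calls aren’t shown here. `VoicePipeline` and the `fake_*` functions are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class VoicePipeline:
    transcribe: Callable[[bytes], str]   # STT slot (Whisper in the course)
    respond: Callable[[str], str]        # the agent's text reply
    synthesize: Callable[[str], bytes]   # TTS slot (ElevenLabs in the course)

    def handle_voice_note(self, audio: bytes) -> bytes:
        text_in = self.transcribe(audio)
        text_out = self.respond(text_in)
        return self.synthesize(text_out)

# Hypothetical stubs so the sketch runs without any API keys.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" is already text

def fake_respond(text: str) -> str:
    return f"You said: {text}"

def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")  # pretend these bytes are an audio file

pipeline = VoicePipeline(fake_transcribe, fake_respond, fake_synthesize)
print(pipeline.handle_voice_note(b"hi Ava"))  # b'You said: hi Ava'
```

Keeping STT and TTS as injected callables makes it easy to swap providers, or mock them in tests.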

Lesson 5: Ava learns to see

Now that Ava can talk and listen, there’s one crucial piece missing … she needs to see 👀

That’s why in Lesson 5, we’ll show you how to integrate Vision-Language Models (VLMs) into your agentic workflows. Ava will be able to interpret visual content from input images (like a photo of you sipping a mojito 🍋🟩) and even generate her own images using diffusion models.
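On the “see” side, a common way to hand an image to a VLM is an OpenAI-compatible chat request. The image travels as a base64 data URL next to the text prompt, and Groq’s API accepts this format for its vision models. The sketch below only builds the request body. `build_vision_request` and the `YOUR_VLM_MODEL` placeholder are hypothetical, and image generation with diffusion models isn’t covered here.

```python
import base64
import json
from typing import Any, Dict

def build_vision_request(image_bytes: bytes, prompt: str,
                         model: str = "YOUR_VLM_MODEL",  # placeholder model name
                         mime_type: str = "image/jpeg") -> Dict[str, Any]:
    # Encode the raw image bytes as a data URL the model can read inline.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
                ],
            }
        ],
    }

# Fake bytes that merely start like a JPEG; use a real photo in practice.
request = build_vision_request(b"\xff\xd8\xff fake jpeg bytes", "Describe this photo.")
print(json.dumps(request, indent=2))
```

The returned dict is what you’d POST to a chat-completions endpoint. The model’s text description can then flow into the conversation like any other message.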

Pretty cool, right?

Lesson 6: Ava installs WhatsApp

And here we are: the final lesson.

Lesson 6, where Ava finally buys a phone, installs WhatsApp, accepts all the license agreements, and starts chatting with you.

Okay… not exactly. In reality, we’ll walk you through creating a Meta App, connecting it to your agentic application, and setting up the full pipeline.

But hey, the other version sounded way cooler 🤷
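The Meta App setup happens in the dashboard, but the code side of the pipeline is a webhook. Here’s a framework-agnostic sketch, assuming the WhatsApp Cloud API webhook format. Meta first sends a GET request with `hub.mode`, `hub.verify_token` and `hub.challenge`, and you echo the challenge back if the token matches. After that, incoming messages arrive as JSON POST bodies. `VERIFY_TOKEN`, `verify_webhook` and `extract_message` are hypothetical names, and wiring them into an HTTP server is left out.

```python
from typing import Any, Dict, Optional, Tuple

VERIFY_TOKEN = "my-secret-token"  # hypothetical; must match the token set in the Meta App

def verify_webhook(params: Dict[str, str]) -> Tuple[int, str]:
    """Handle Meta's GET verification handshake; returns (status, body)."""
    if params.get("hub.mode") == "subscribe" and params.get("hub.verify_token") == VERIFY_TOKEN:
        return 200, params.get("hub.challenge", "")
    return 403, "Verification failed"

def extract_message(payload: Dict[str, Any]) -> Optional[Dict[str, str]]:
    """Pull sender, type and content out of a webhook POST body."""
    try:
        msg = payload["entry"][0]["changes"][0]["value"]["messages"][0]
    except (KeyError, IndexError):
        return None  # e.g. delivery-status updates carry no "messages"
    kind = msg.get("type", "")
    if kind == "text":
        content = msg["text"]["body"]
    elif kind in ("audio", "image"):
        content = msg[kind]["id"]  # a media ID; the file must be downloaded separately
    else:
        content = ""
    return {"from": msg.get("from", ""), "type": kind, "content": content}

print(verify_webhook({"hub.mode": "subscribe", "hub.verify_token": "my-secret-token",
                      "hub.challenge": "12345"}))  # (200, '12345')

sample = {"entry": [{"changes": [{"value": {"messages": [
    {"from": "15550001111", "type": "text", "text": {"body": "Hi Ava!"}}]}}]}]}
print(extract_message(sample))
```

From there, the message type decides the route. Text goes straight to the agent. Audio and image media IDs need to be downloaded first and then passed through STT or the VLM.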

Bonus Lesson: The Full Course

For those of you who are definitely a little crazy (and I love you for that), Jesús and I put together a 2+ hour deep-dive video covering every lesson in detail.

So if you’re feeling ready … go ahead and give it a shot!

Ava has been one of my favorite projects so far, and the response from the AI community has been incredible. That’s why Jesús and I have been thinking …

What if we built something again?

Well, just a heads-up: the conversations have already started, and we’re officially working on Ava 2.0. This time? Not just WhatsApp agents, but phone call agents ☎️

And of course, articles, videos, and code. As always.

We hope you’re as excited about this as we are. I’ll keep you posted.

See you next Wednesday, builders! 🫶
