Deploying a Multimodal Agent on AWS Lambda

The ultimate LangGraph Workshop: Part 2

In the previous article, we learned the fundamentals of LangGraph and explored how to create a Telegram Agent directly from Google Colab.

Today, we’ll take that project one step further: we’ll deploy our multimodal agent on AWS, using AWS Lambda Functions, a serverless solution that lets us forget about managing servers, scaling, or maintaining infrastructure.

Project Overview

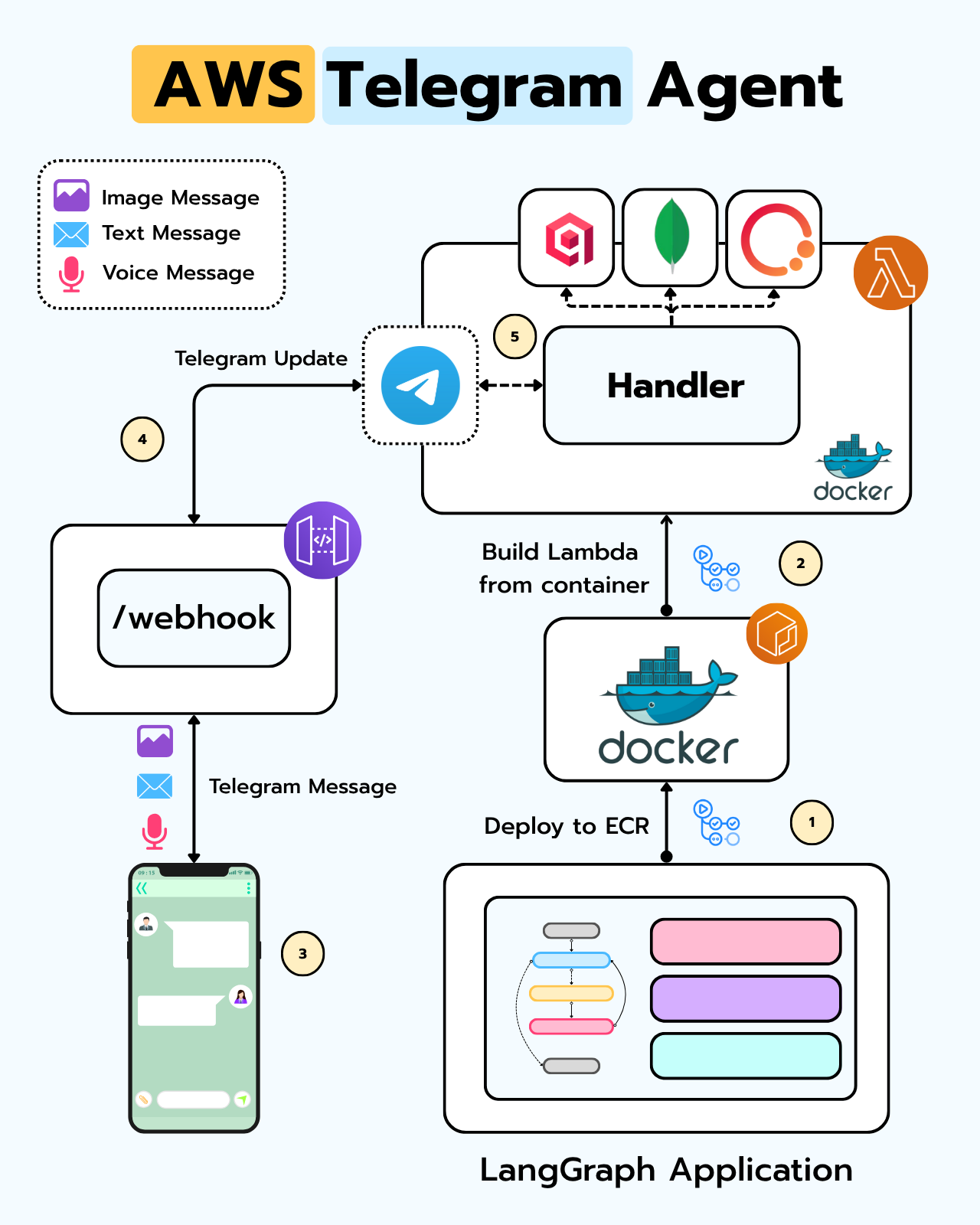

Before diving in, it’s important to understand the overall architecture. In the following video, you’ll find an overview of the different components involved in this deployment:

As you saw in the video, the workflow we’ll follow looks like this:

Package the code as a Docker image and publish it to Amazon Elastic Container Registry (ECR), AWS’s service for hosting Docker images.

Create an AWS Lambda Function that runs this Docker image as a container.

Set up an API Gateway to expose the Lambda function to the internet, allowing Telegram to send updates via a webhook on the /webhook route.

Connect your Telegram Bot to this API Gateway. Once connected, your agent will be ready to operate in production.
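To make the last two steps more concrete, here is a minimal sketch of what the Lambda side of the webhook could look like. It is not the code from this workshop. It assumes API Gateway forwards requests with the Lambda proxy integration, and the names `lambda_handler` and `build_set_webhook_url` are hypothetical helpers chosen for illustration. The agent call itself is left as a comment, and the only Telegram detail relied on is the Bot API's `setWebhook` method with its `url` parameter.

```python
import base64
import json
from urllib.parse import urlencode


def lambda_handler(event, context):
    """Handle an API Gateway proxy event carrying a Telegram update."""
    # HTTP APIs (payload v2) expose the path as "rawPath", REST APIs as "path".
    path = event.get("rawPath") or event.get("path") or ""
    if not path.endswith("/webhook"):
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}

    # API Gateway may deliver the request body base64-encoded.
    body = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")

    try:
        update = json.loads(body)
    except json.JSONDecodeError:
        update = None
    if not isinstance(update, dict):
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}

    # A Telegram update with a text message carries the chat id and the text.
    message = update.get("message") or {}
    chat_id = (message.get("chat") or {}).get("id")
    text = message.get("text", "")

    # Assumption: this is where the agent would be invoked with `text`
    # and its reply sent back to `chat_id`. Omitted in this sketch.

    return {
        "statusCode": 200,
        "body": json.dumps({"ok": True, "chat_id": chat_id, "text": text}),
    }


def build_set_webhook_url(bot_token, api_gateway_url):
    """Build the Telegram Bot API URL that registers the /webhook route."""
    query = urlencode({"url": f"{api_gateway_url.rstrip('/')}/webhook"})
    return f"https://api.telegram.org/bot{bot_token}/setWebhook?{query}"
```

Requesting the URL returned by `build_set_webhook_url` once (for example from a browser or with `curl`) is what tells Telegram to start sending updates to the API Gateway endpoint. The handler answers with a 200 status so that Telegram treats the update as delivered.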

This is just a summary. The diagram shown in the video contains many more details, which we’ll explore step by step throughout this article.
