When we first explored the Reflection Pattern (see Lesson 1 of the series), we believed our agents were unbeatable. I mean, an agent that reflects on its own output... can we really improve on that? 🤔

The answer is yes! The Reflection Agent has a significant weakness. Consider this: what happens if you ask it about NVIDIA’s stock price right now? Or about yesterday’s temperature in Madrid?

As you might already know, the information encoded in the LLM’s weights is frozen at training time, so it isn’t enough to provide accurate, up-to-date and context-specific answers to these questions.

That’s why we need to equip our agent with ways to access the outside world 🌍

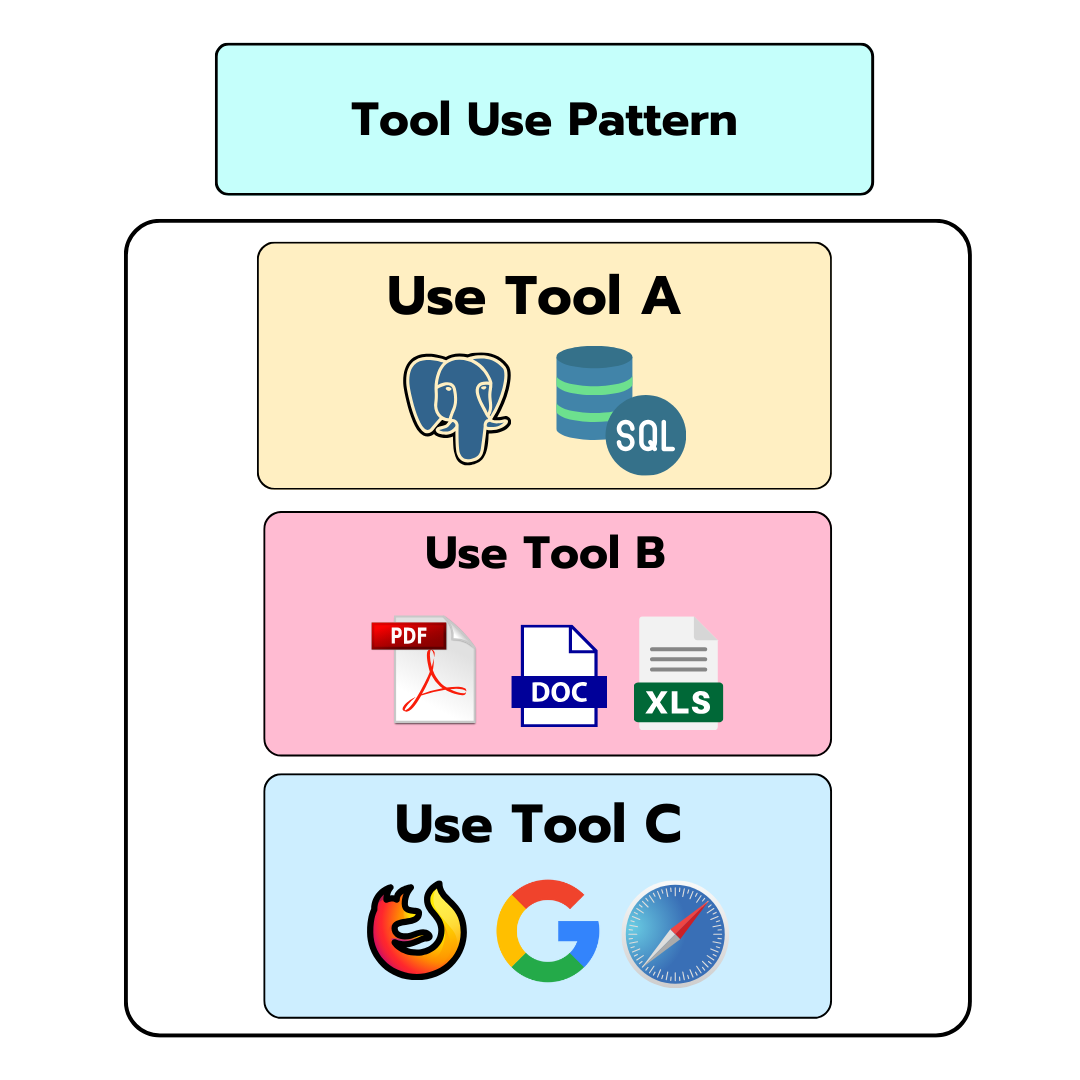

This is where Tools come in. At their core, tools are functions the LLM can call (more precisely, ask our code to call) to extend its capabilities. And this brings us to the focus of the second pattern: the Tool Pattern.

In this post, you’ll learn how tools work and how to build them from scratch using Python and Groq LLMs.

Ready? Let’s begin! 🧑‍💻

You can find the code for the whole series on GitHub!

Building a Tool from Scratch

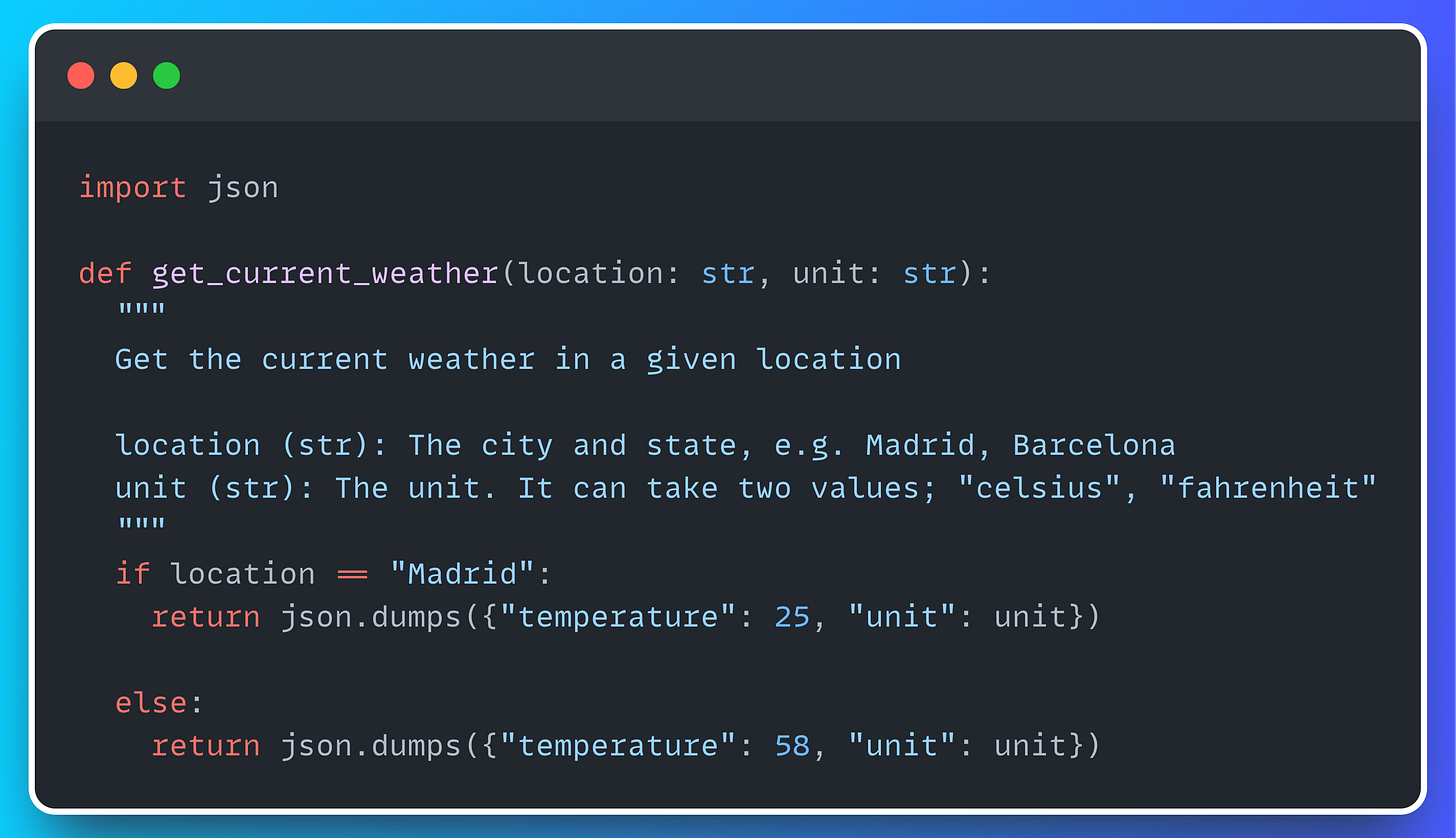

Let’s start with the basics. To begin with, take a look at this function 👇
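A minimal sketch of such a function, assuming a mock `get_current_weather(location, unit)` that returns a JSON string instead of querying a real weather API (the readings are made up; the Madrid one matches the result shown later in this post):

```python
import json


def get_current_weather(location: str, unit: str) -> str:
    """
    Get the current weather in a given location.

    location (str): The city name, e.g. Madrid, Barcelona
    unit (str): The unit. It can take two values: "celsius", "fahrenheit"
    """
    # Hypothetical mock: no weather API is queried and the unit is only echoed back.
    if location == "Madrid":
        return json.dumps({"temperature": 25, "unit": unit})
    return json.dumps({"temperature": 18, "unit": unit})
```

The docstring matters more than it seems: it is exactly the kind of description we will hand to the LLM.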

Simple, right? Embarrassingly simple, I’d say… But the question is: how can we make this function available to an LLM?

A System Prompt that works

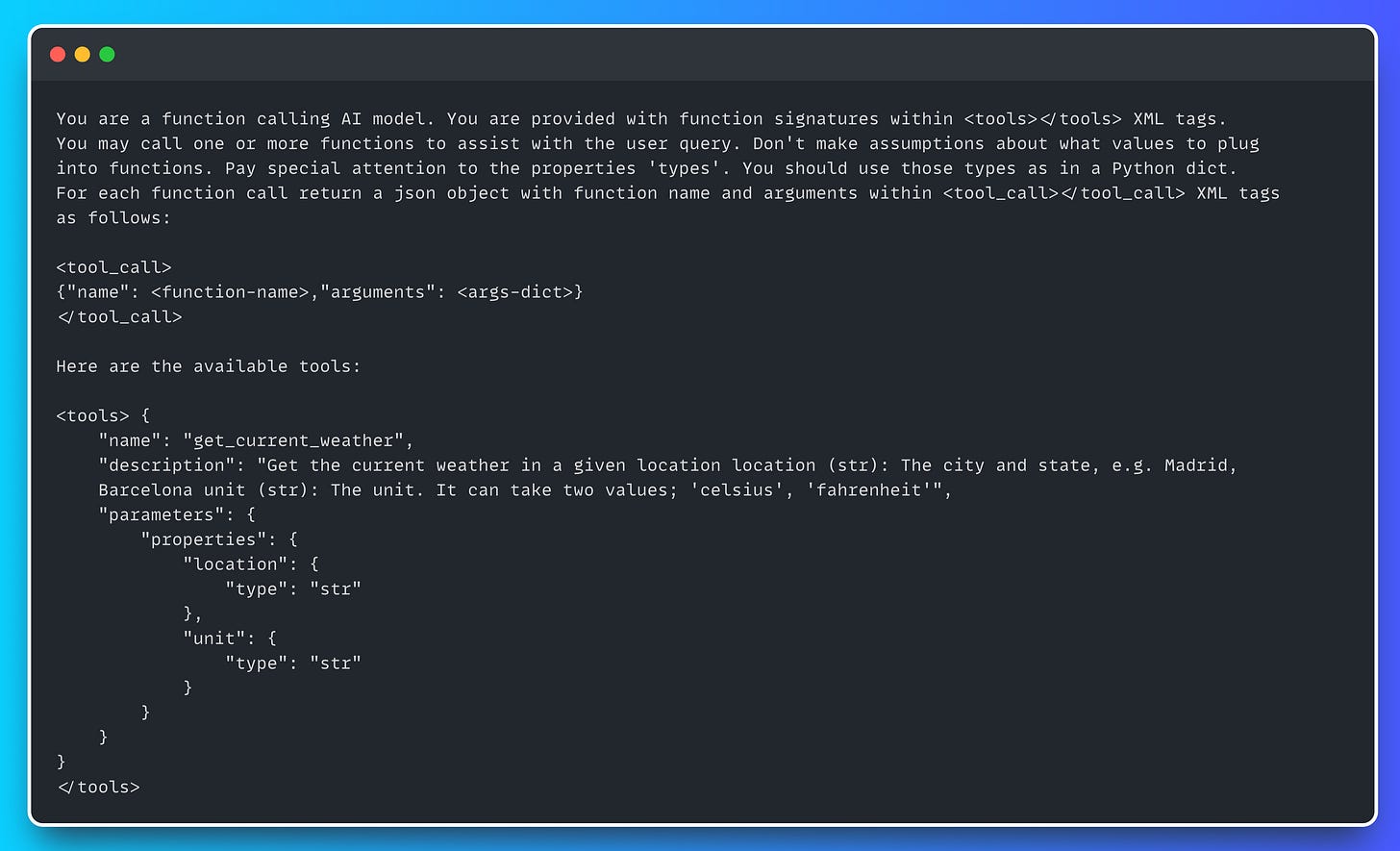

For the LLM to be aware of this function, we need to provide some relevant information about it in the context: the function name, its arguments, a description, etc. Take a look at the following System Prompt.
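A minimal sketch of such a System Prompt, written as a Python template. The `<tools>` and `<tool_call>` tag names, the hand-written signature for `get_current_weather` and the `build_system_prompt` helper are assumptions for illustration, not the exact prompt from the repo:

```python
import json

# Hypothetical, hand-written signature in a JSON-schema-like shape.
weather_signature = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "properties": {
            "location": {"type": "str"},
            "unit": {"type": "str"},
        }
    },
}

# Doubled braces ({{ }}) are literal braces for str.format; {tools} is the only field.
TOOL_SYSTEM_PROMPT = """
You are a function calling AI model. You are provided with function signatures within <tools></tools> XML tags.
You may call one or more functions to assist with the user query. Don't make assumptions about what values to plug
into functions. For each function call return a JSON object with the function name and arguments within
<tool_call></tool_call> XML tags as follows:

<tool_call>
{{"name": <function-name>, "arguments": <args-dict>}}
</tool_call>

Here are the available tools:

<tools>
{tools}
</tools>
"""


def build_system_prompt(signatures: list[dict]) -> str:
    """Render every function signature as JSON inside the <tools> block."""
    tools = "\n".join(json.dumps(sig) for sig in signatures)
    return TOOL_SYSTEM_PROMPT.format(tools=tools)


system_prompt = build_system_prompt([weather_signature])
```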

As you can see, the System Prompt instructs the LLM to behave as a function-calling AI model that, given a list of function signatures inside XML tags, selects which one to use and returns that call, also wrapped in XML tags.

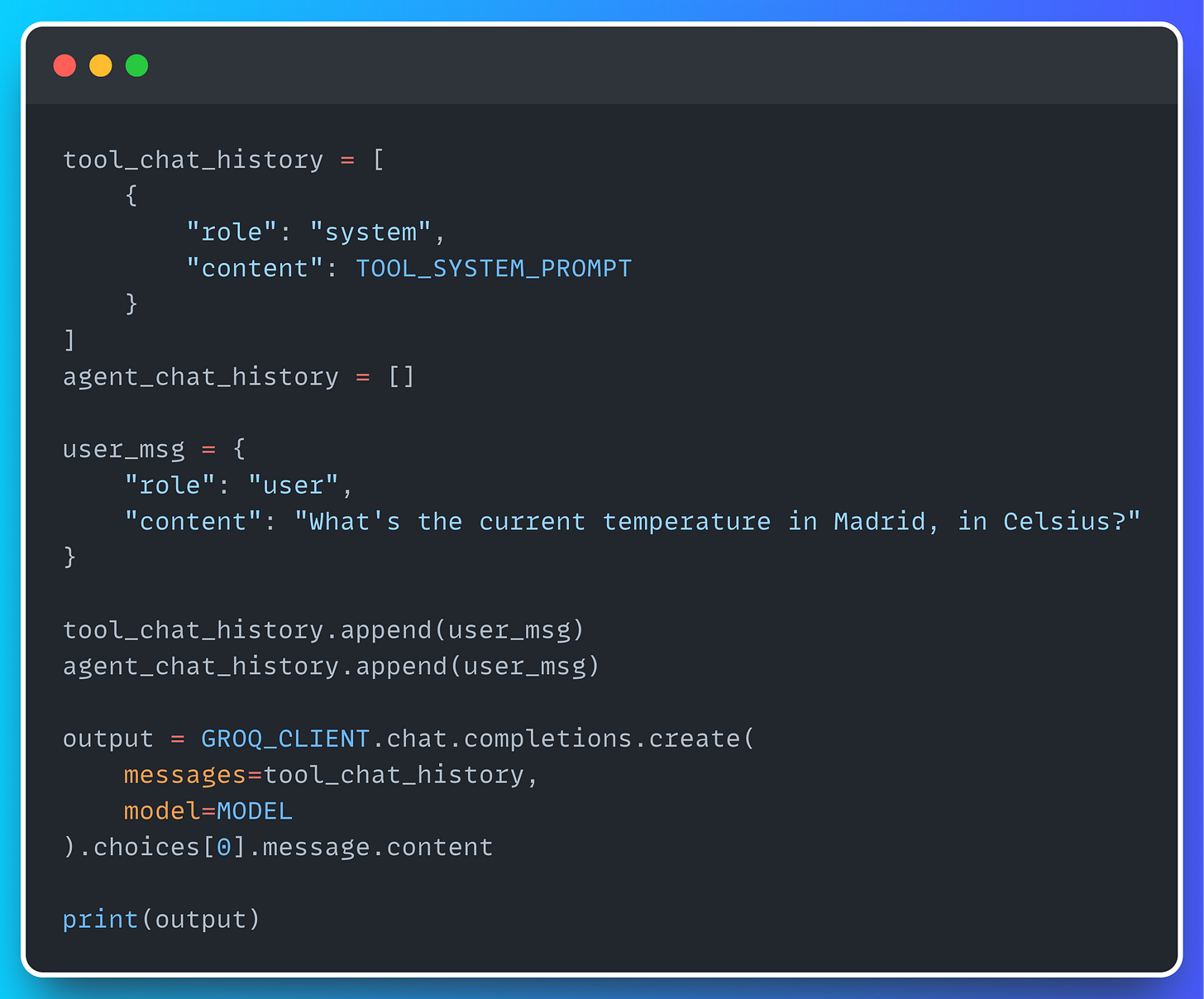

Let’s see how it works in practice! Let's ask a very simple question: “What’s the current temperature in Madrid in Celsius?”

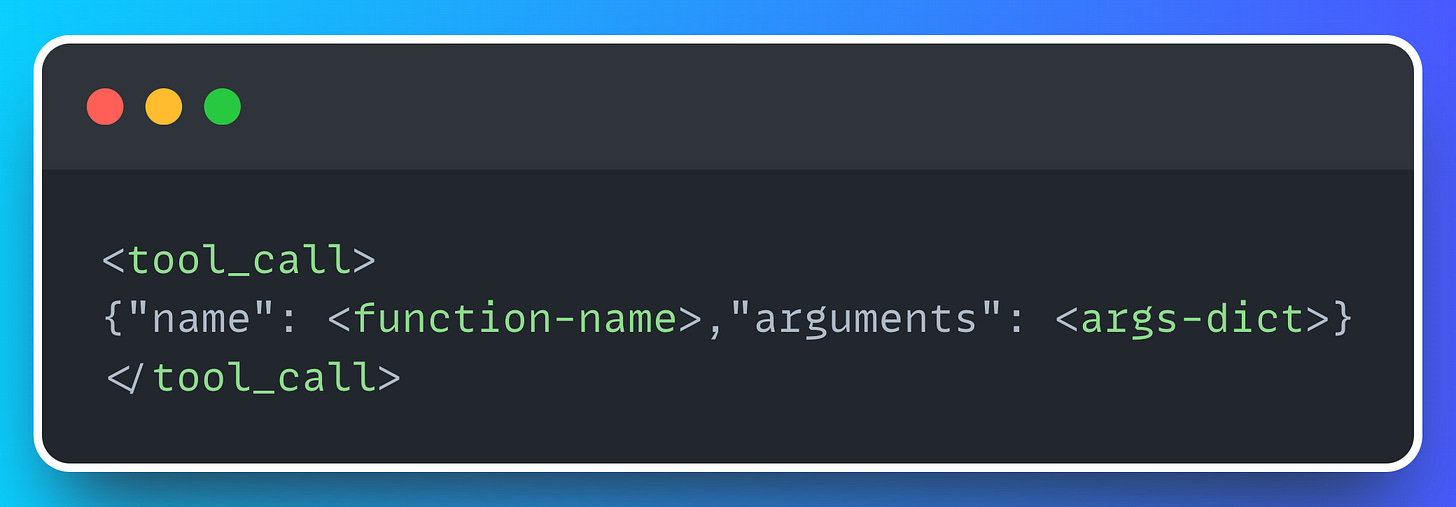

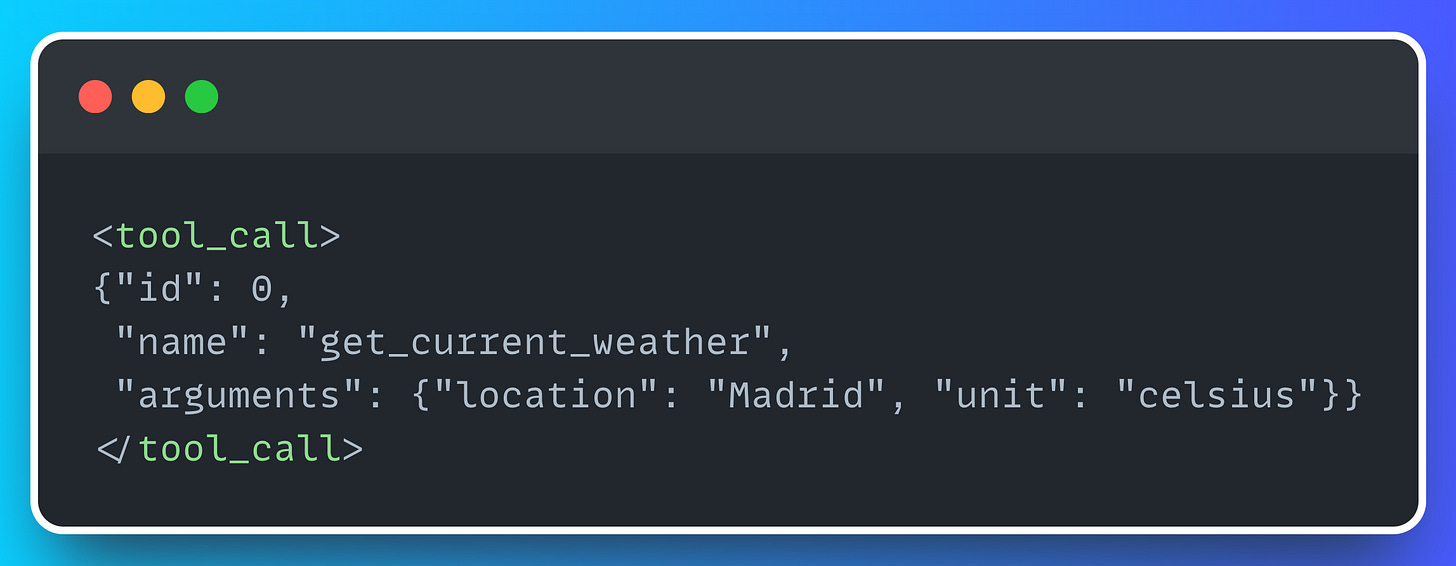
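One way to send this question to a Groq-hosted model, sketched with the official `groq` Python client. The model name and the `build_chat_history`/`ask_llm` helpers are assumptions; the client reads your key from the `GROQ_API_KEY` environment variable:

```python
# Assumption: check Groq's documentation for the models currently available.
MODEL = "llama-3.3-70b-versatile"


def build_chat_history(system_prompt: str, question: str) -> list[dict]:
    """Start a conversation: tool instructions first, then the user's question."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def ask_llm(chat_history: list[dict], model: str = MODEL) -> str:
    """Send the conversation to a Groq-hosted model and return the reply text."""
    # Imported here so the rest of the sketch runs without the groq package installed.
    from groq import Groq

    client = Groq()  # reads the GROQ_API_KEY environment variable
    response = client.chat.completions.create(messages=chat_history, model=model)
    return response.choices[0].message.content


chat_history = build_chat_history(
    system_prompt="...",  # placeholder for the tool System Prompt
    question="What's the current temperature in Madrid in Celsius?",
)
# output = ask_llm(chat_history)  # needs GROQ_API_KEY and network access
```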

After running this code, we should see something like this:

That's an improvement! We don't have the final answer yet but, with this information, we can obtain it! How? Well, we just need to:

1️⃣ Parse the LLM output, which means stripping the XML tags.

2️⃣ Load the output as a proper Python dict.
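Both steps can be sketched with a regular expression and `json.loads`, assuming the model wraps its call in `<tool_call>` tags. The sample reply and the `parse_tool_call_str` name are hypothetical:

```python
import json
import re

# Hypothetical raw reply, in the format requested by the System Prompt.
llm_output = """<tool_call>
{"name": "get_current_weather", "arguments": {"location": "Madrid", "unit": "celsius"}}
</tool_call>"""


def parse_tool_call_str(output: str) -> dict:
    """Strip the <tool_call> XML tags and load the JSON payload as a Python dict."""
    # Step 1: keep only what sits between the tags (DOTALL lets . match newlines).
    match = re.search(r"<tool_call>(.*?)</tool_call>", output, re.DOTALL)
    if match is None:
        raise ValueError("No <tool_call> block found in the LLM output")
    # Step 2: parse the remaining text as JSON.
    return json.loads(match.group(1).strip())


parsed_output = parse_tool_call_str(llm_output)
```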

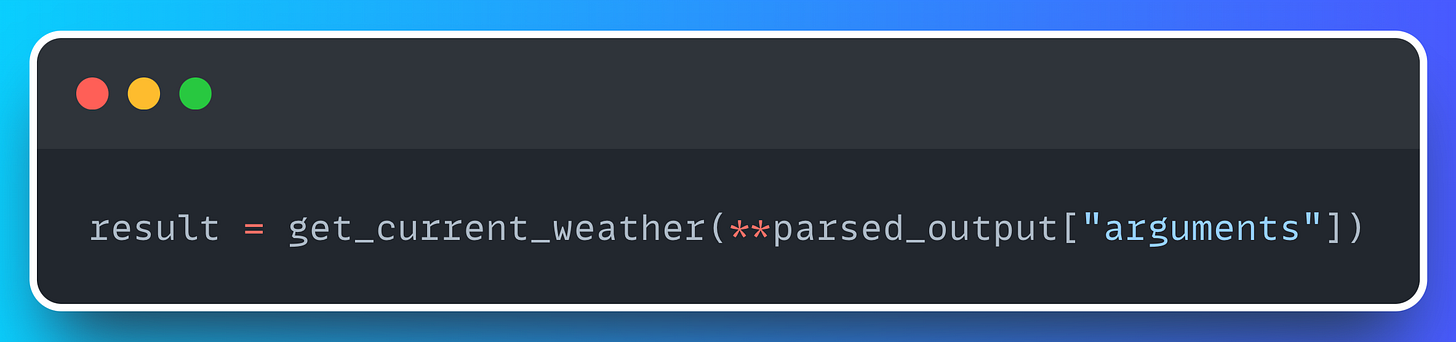

These two steps are straightforward to implement, as you can see in this Python module. With all the arguments in place, running the function is now a simple task 👇
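A minimal sketch of that step, assuming a hypothetical `AVAILABLE_TOOLS` dict that maps the name written by the LLM to the Python function, plus a mock `get_current_weather`:

```python
import json


def get_current_weather(location: str, unit: str) -> str:
    """Hypothetical mock that returns a fixed reading."""
    if location == "Madrid":
        return json.dumps({"temperature": 25, "unit": unit})
    return json.dumps({"temperature": 18, "unit": unit})


# Map tool names (as the LLM writes them) to the functions that implement them.
AVAILABLE_TOOLS = {"get_current_weather": get_current_weather}

# A parsed tool call: the dict we get after stripping the XML tags.
parsed_output = {
    "name": "get_current_weather",
    "arguments": {"location": "Madrid", "unit": "celsius"},
}

# Look the function up by name and unpack the arguments as keyword arguments.
fn = AVAILABLE_TOOLS[parsed_output["name"]]
result = fn(**parsed_output["arguments"])
print(result)  # {"temperature": 25, "unit": "celsius"}
```

Looking the function up in an explicit dict, rather than resolving the name dynamically, means the LLM can only trigger functions you registered on purpose.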

If you run the function above, you’ll get the following result:

`'{"temperature": 25, "unit": "celsius"}'`

Exactly what we expected from the get_current_weather function: a temperature of 25 degrees Celsius in Madrid!

Now, we can simply add this result to the chat_history so that the LLM knows the information it has to return to the user.
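A minimal sketch of that step, assuming the tool result is fed back as a user message prefixed with `Observation:`. The prefix, the message roles and the `add_observation` helper are illustrative choices:

```python
import json

# Hypothetical conversation so far: System Prompt, question and the model's tool call.
chat_history = [
    {"role": "system", "content": "..."},  # placeholder for the tool System Prompt
    {"role": "user", "content": "What's the current temperature in Madrid in Celsius?"},
    {
        "role": "assistant",
        "content": '<tool_call>\n{"name": "get_current_weather", '
        '"arguments": {"location": "Madrid", "unit": "celsius"}}\n</tool_call>',
    },
]

result = json.dumps({"temperature": 25, "unit": "celsius"})  # what the tool returned


def add_observation(history: list[dict], tool_result: str) -> list[dict]:
    """Return a new history with the tool result appended as an observation."""
    # Assumption: a plain user message is used because this prompt-based approach
    # does not rely on the chat API's native tool-calling roles.
    return history + [{"role": "user", "content": f"Observation: {tool_result}"}]


chat_history = add_observation(chat_history, result)
# The updated chat_history is then sent to the LLM again to get the final answer.
```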

In my case, this is the output returned by the LLM:

The current temperature in Madrid is 25 degrees Celsius.

And this, my dear friend, is the way a Tool works under the hood!

Easy, huh? Now it’s time to create a tool decorator, just like frameworks such as LlamaIndex, CrewAI or LangChain do!

Tool Decorator

To recap, we have a way for the LLM to generate tool_calls that we can use later to properly run the functions. But, as you might imagine, there are some pieces missing:

1️⃣ We need to automatically transform any function into a description like the one we saw in the initial System Prompt.

2️⃣ We need a way to tell the agent that this function is a tool.

This can be accomplished with an elegant solution, the tool decorator, which transforms any Python function into a Tool object. You can see the implementation of the tool_decorator and the Tool in the repo!
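A minimal sketch of the idea, assuming a dataclass-based `Tool` whose fields follow the name, fn_signature and fn attributes described in this post. The `run` method, the simplified signature (name, docstring and parameter names only) and the `add` example are hypothetical:

```python
import inspect
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    """A Python function plus the description the LLM needs to call it."""

    name: str
    fn_signature: str  # JSON string to place inside the <tools> block of the prompt
    fn: Callable

    def run(self, **kwargs):
        """Execute the wrapped function with the arguments chosen by the LLM."""
        return self.fn(**kwargs)

    def __str__(self) -> str:
        return self.fn_signature


def tool(fn: Callable) -> Tool:
    """Decorator that turns a plain function into a Tool object."""
    # Simplified signature: name, docstring and parameter names only.
    signature = {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": list(inspect.signature(fn).parameters),
    }
    return Tool(name=fn.__name__, fn_signature=json.dumps(signature), fn=fn)


@tool
def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b
```

After decoration, `add` is a `Tool`, not a function: you call it through `add.run(a=2, b=3)`, and `str(add)` gives the description to paste into the System Prompt.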

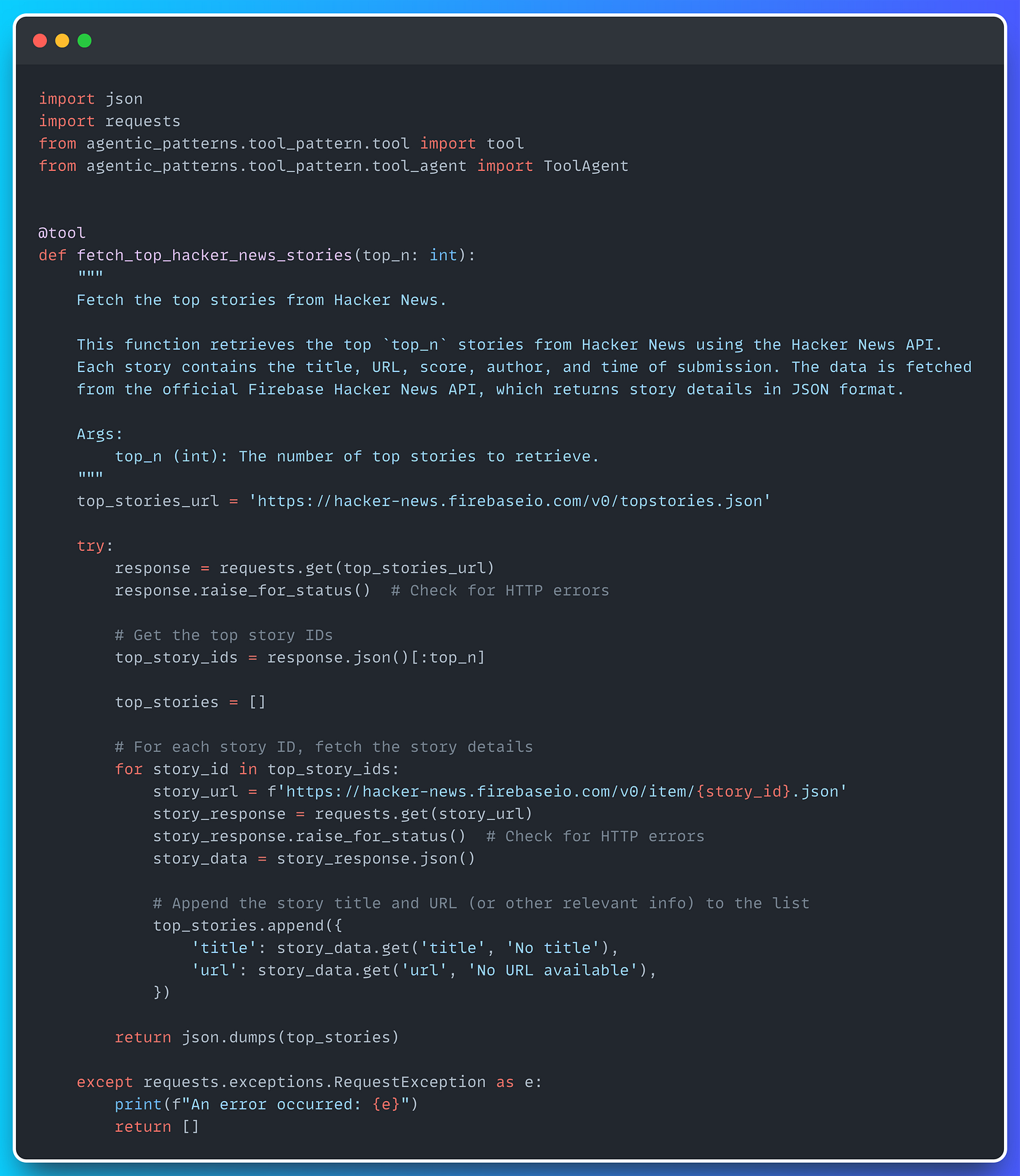

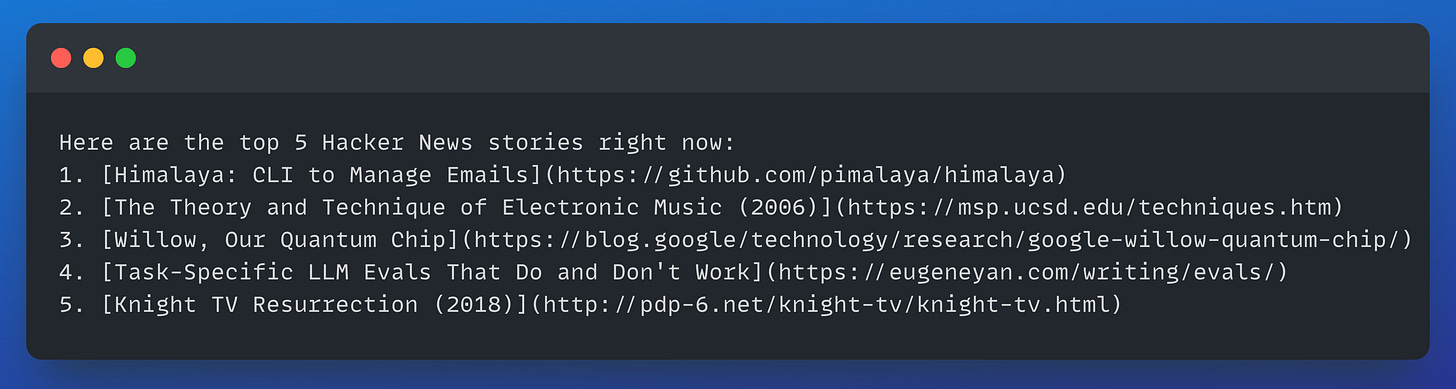

To see the tool decorator in action, we’ll build a tool that interacts with Hacker News, fetching the top n stories.

The block of code above transforms the Python function fetch_top_hacker_news_stories into a Tool.

The Tool has three attributes: a name, a fn_signature and fn, the function we are going to call (in this case, fetch_top_hacker_news_stories).

Under the hood, the Tool class will generate the function signature automatically. You can see how the signature is extracted here.
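A minimal sketch of signature extraction with the standard `inspect` and `typing` modules, assuming the argument types come from the function's annotations. The `get_fn_signature` name and the output layout are illustrative:

```python
import inspect
import json
from typing import get_type_hints


def get_fn_signature(fn) -> dict:
    """Build a JSON-serializable description of a function for the System Prompt."""
    hints = get_type_hints(fn)
    hints.pop("return", None)  # only the parameters matter to the LLM
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {
            "properties": {
                # Fall back to str(hint) for typing constructs without a __name__.
                param: {"type": getattr(hint, "__name__", str(hint))}
                for param, hint in hints.items()
            }
        },
    }


def fetch_top_hacker_news_stories(top_n: int) -> str:
    """Fetch the top stories from Hacker News."""
    ...  # body omitted: only the signature is inspected here


print(json.dumps(get_fn_signature(fetch_top_hacker_news_stories)))
```

Parameters without type annotations are skipped by `get_type_hints`, which is a good reason to annotate every tool argument.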

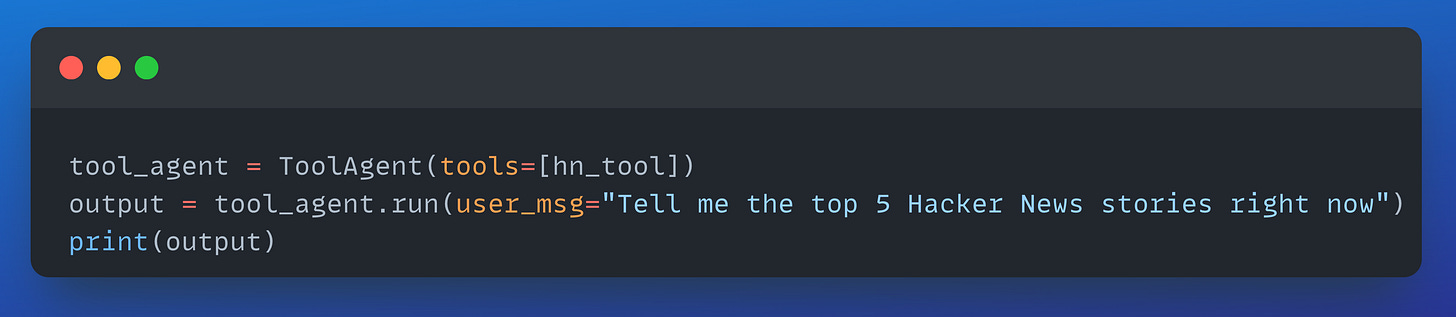

Now that we have a tool, let's run the agent.
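Putting the pieces together, here is a minimal sketch of an agent loop: call the LLM, execute any requested tool, feed the result back as an observation, and stop when the reply contains no tool call. The `run_tool_agent` function, the `Observation:` prefix and the scripted `fake_llm` (used so the loop runs offline) are assumptions; in practice `generate` would wrap a real Groq call:

```python
import json
import re
from typing import Callable


def run_tool_agent(
    question: str,
    system_prompt: str,
    tools: dict[str, Callable[..., str]],
    generate: Callable[[list[dict]], str],
    max_steps: int = 5,
) -> str:
    """Minimal tool loop: ask, run any requested tool, feed the result back."""
    history = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]
    for _ in range(max_steps):
        reply = generate(history)
        history.append({"role": "assistant", "content": reply})
        match = re.search(r"<tool_call>(.*?)</tool_call>", reply, re.DOTALL)
        if match is None:
            return reply  # no tool requested: this is the final answer
        call = json.loads(match.group(1).strip())
        result = tools[call["name"]](**call["arguments"])
        history.append({"role": "user", "content": f"Observation: {result}"})
    raise RuntimeError("The agent did not produce a final answer within max_steps")


def fake_llm(history: list[dict]) -> str:
    """Scripted stand-in for the LLM: request the tool first, then answer."""
    if history[-1]["content"].startswith("Observation:"):
        return "The current temperature in Madrid is 25 degrees Celsius."
    return '<tool_call>{"name": "get_current_weather", "arguments": {"location": "Madrid", "unit": "celsius"}}</tool_call>'


def get_current_weather(location: str, unit: str) -> str:
    """Hypothetical mock that returns a fixed reading."""
    return json.dumps({"temperature": 25 if location == "Madrid" else 18, "unit": unit})


answer = run_tool_agent(
    "What's the current temperature in Madrid in Celsius?",
    system_prompt="...",  # placeholder for the tool System Prompt
    tools={"get_current_weather": get_current_weather},
    generate=fake_llm,
)
```

The `max_steps` cap keeps a misbehaving model from looping over tool calls forever.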

And there you have it! A fully functional Tool! 🛠️

If you prefer video lectures, I also have a YouTube video covering the Tool Pattern! 👇

That’s all for today! Next week, we’ll talk about the Planning Pattern, the ReAct technique and how to implement reasoning heuristics for our Agents.

Happy tooling! 👋

Miguel